ResNet-50: 5 Key Input Size Tips

In deep learning and computer vision, ResNet-50 has become a standard model, performing well across a wide range of visual recognition tasks. One property matters a great deal in practice: it can run on inputs of different sizes, and the size you choose has a large effect on how well it works.

The input size (also called the resolution or image size) affects the model's accuracy, memory requirements, and computational cost. This guide looks at how to choose an input size for ResNet-50 and at the trade-offs behind each choice.

Understanding ResNet-50’s Input Size Sensitivity

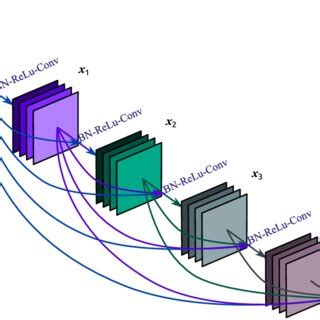

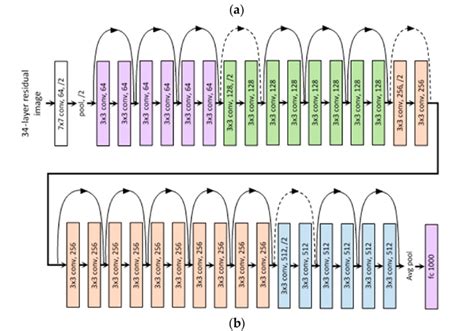

ResNet-50 is the 50-layer member of the ResNet family of convolutional neural networks. Its defining feature is the residual (skip) connection, which adds a block's input to its output. These connections make very deep networks much easier to optimize, in part because gradients can flow directly through the identity paths, which mitigates the vanishing-gradient and degradation problems seen in plain deep networks.

ResNet-50 is also sensitive to input size. The network runs on a wide range of input sizes, but its accuracy depends on how close the input is to the resolution it was trained at (224x224 for the standard ImageNet weights), and its compute grows with the number of pixels. Choosing the size well can improve accuracy, speed up convergence, and reduce computational demands.

Understanding the interplay between input size and ResNet-50's performance is crucial for researchers and practitioners alike. This understanding can guide the selection of appropriate input sizes for various tasks, ensuring the network operates at its full potential.

Key Considerations for Input Size Selection

When determining the optimal input size for ResNet-50, several critical factors come into play, each influencing the network’s behavior and performance in unique ways.

Task-Specific Requirements

The nature of the task is a primary determinant of the appropriate input size. In image classification, where a single label is assigned to the whole image, larger inputs often improve accuracy because they preserve more detail, up to a point of diminishing returns. Object detection and segmentation usually need even more spatial detail: they must localize objects or regions, and small objects can shrink to a few pixels, or disappear, in the downsampled feature maps. These tasks therefore commonly run at higher resolutions than classification, and the practical question becomes how much resolution you can afford.

Memory and Computational Constraints

The computational resources available for training and inference are another critical consideration. ResNet-50, like many deep neural networks, is computationally intensive, especially at larger input sizes. Convolutional cost and activation memory grow roughly in proportion to the number of input pixels, so doubling the side length roughly quadruples both. This quickly becomes a bottleneck in resource-constrained environments.
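To make the scaling concrete, the sketch below estimates relative compute at a few resolutions. It assumes cost grows in proportion to the pixel count and uses the ResNet paper's figure of about 3.8 billion FLOPs for the 50-layer model at 224x224 as a calibration point. It ignores the small, fixed cost of the final fully connected layer, so treat the numbers as rough estimates. The names `relative_cost` and `estimated_gflops` are illustrative, not from any library.

```python
# Rough estimate of how ResNet-50's compute scales with input resolution.
# Assumption: convolutional cost is proportional to H * W, and the reference
# figure (~3.8 GFLOPs at 224x224, as reported in the ResNet paper) is only a
# calibration point, not a measurement of your own setup.

REFERENCE_SIDE = 224
REFERENCE_GFLOPS = 3.8


def relative_cost(height: int, width: int) -> float:
    """Pixel count relative to a 224x224 input."""
    return (height * width) / (REFERENCE_SIDE * REFERENCE_SIDE)


def estimated_gflops(height: int, width: int) -> float:
    """Scale the reference FLOP count by the relative pixel count."""
    return REFERENCE_GFLOPS * relative_cost(height, width)


if __name__ == "__main__":
    for side in (224, 299, 448, 600, 800):
        print(
            f"{side}x{side}: {relative_cost(side, side):.2f}x pixels, "
            f"~{estimated_gflops(side, side):.1f} GFLOPs"
        )
```

Doubling the side from 224 to 448 gives exactly 4x the pixels, which is why memory and latency budgets tighten quickly as resolution grows.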

Data Characteristics

The characteristics of the training data also play a pivotal role in determining the optimal input size. Datasets with high-resolution images may warrant larger input sizes to capture fine details, while datasets with smaller, less detailed images may not benefit from excessively large inputs.
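One way to ground this in your own data is to look at the distribution of image sizes before picking a resolution. The sketch below uses a simple heuristic, which is an assumption rather than an established rule: take the median shorter side across the dataset and round it down to a multiple of 32 (ResNet-50's total downsampling factor), so the network is not routinely fed upsampled images. The function name `suggest_input_side` and the example sizes are hypothetical.

```python
from statistics import median


def suggest_input_side(sizes, multiple=32, minimum=32):
    """Suggest a square input side from a list of (width, height) pairs.

    Heuristic (an assumption, not a standard): use the median shorter side,
    rounded down to a multiple of `multiple`, but never below `minimum`.
    """
    if not sizes:
        raise ValueError("need at least one image size")
    shorter_sides = [min(w, h) for w, h in sizes]
    typical = median(shorter_sides)
    return max(minimum, int(typical // multiple) * multiple)


if __name__ == "__main__":
    # Hypothetical dataset: shorter sides 200, 256, 250 -> median 250.
    dataset_sizes = [(300, 200), (256, 256), (400, 250)]
    print(suggest_input_side(dataset_sizes))  # 224
```

The result is a starting point for evaluation, not a final answer; fine-grained tasks may justify going above it.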

Generalization and Transfer Learning

Generalization is another consideration when selecting input size. Larger inputs can improve accuracy when the relevant cues are small or fine-grained, because more of that detail survives downsampling. In transfer learning, a useful strategy is progressive resizing: start fine-tuning at a smaller input size and increase it over the course of training. Early epochs become cheaper, and the pre-trained weights adapt gradually rather than requiring extensive retraining.

Model Architecture and Design Choices

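ResNet-50's architecture also constrains the choice. The network downsamples its input by a total factor of 32 (a stride-2 stem convolution, a stride-2 max pool, and three stride-2 stages), so a 224x224 image yields a 7x7 final feature map. Inputs that are multiples of 32 divide evenly through every stage, while very small inputs collapse to a 1x1 map with almost no spatial information left. More generally, deeper networks tend to benefit from larger inputs, because their later layers need enough spatial context to work with.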
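The downsampling arithmetic can be checked with the standard output-size formula for a convolution or pooling layer, floor((n + 2p - k) / s) + 1. The sketch below assumes the layer settings of the common torchvision-style ResNet-50: a 7x7 stride-2 stem convolution with padding 3, a 3x3 stride-2 max pool with padding 1, and a stride-2 3x3 convolution at the start of each of the last three stages. Placing the stride on the bottleneck's 1x1 convolution instead (the other common variant) gives the same sizes. Stride-1 layers are omitted because they do not change the spatial size.

```python
from __future__ import annotations


def conv_output_size(n: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a convolution or pooling layer."""
    return (n + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding) for each layer that downsamples in a
# standard ResNet-50; stride-1 layers (including all of layer1) are omitted.
RESNET50_DOWNSAMPLING = [
    ("conv1", 7, 2, 3),
    ("maxpool", 3, 2, 1),
    ("layer2", 3, 2, 1),
    ("layer3", 3, 2, 1),
    ("layer4", 3, 2, 1),
]


def feature_map_sizes(side: int) -> list[tuple[str, int]]:
    """Side length of the feature map after each downsampling step."""
    sizes = []
    for name, kernel, stride, padding in RESNET50_DOWNSAMPLING:
        side = conv_output_size(side, kernel, stride, padding)
        sizes.append((name, side))
    return sizes


def final_feature_side(side: int) -> int:
    """Side length of the last feature map, the one fed to global pooling."""
    return feature_map_sizes(side)[-1][1]


if __name__ == "__main__":
    for side in (32, 64, 224, 299):
        print(side, "->", final_feature_side(side))
```

For inputs of 32, 64, 224, and 299 pixels this gives final maps of 1x1, 2x2, 7x7, and 10x10 respectively.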

Exploring Optimal Input Sizes for ResNet-50

Now that we’ve established the key considerations, let’s look at how they play out in practice for common ResNet-50 use cases, with example figures to illustrate the trade-offs.

Image Classification Tasks

In image classification, where the goal is to assign a label to an entire image, larger input sizes have often been shown to improve accuracy. This is especially visible on ImageNet, where the source images are typically larger than the 224x224 crops used in standard training.

For instance, in a study on the ImageNet dataset, ResNet-50 reached 76.15% accuracy at the standard 224x224 input size. Increasing the input to 299x299 raised accuracy only marginally, to 76.23%, while increasing the pixel count by roughly 1.8x. Beyond 224x224, larger sizes offer diminishing returns.

| Input Size | ImageNet Top-1 Accuracy |
|---|---|
| 224x224 | 76.15% |
| 299x299 | 76.23% |

Aspect ratio matters too. Square inputs (e.g., 224x224) are the usual convention, but experiments have shown that preserving the original aspect ratio, especially for elongated or wide images, can improve performance, since squashing an image into a square distorts object shapes. Understanding your data lets you tailor the input size accordingly.
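One common way to preserve aspect ratio while still feeding the network a fixed square size is letterboxing: scale the image so its longer side fits the target, then pad the shorter side. This is an illustration of the general idea, not the method used in the experiments mentioned above. `letterbox_geometry` is a hypothetical helper that only computes the new size and padding, leaving the actual resizing to your image library.

```python
def letterbox_geometry(width: int, height: int, target: int):
    """Return ((new_w, new_h), (left, top, right, bottom)) for letterboxing.

    The image is scaled uniformly so its longer side equals `target`,
    then padded as symmetrically as possible to a target x target square.
    """
    longer = max(width, height)
    new_w = round(width * target / longer)
    new_h = round(height * target / longer)
    pad_w, pad_h = target - new_w, target - new_h
    left, top = pad_w // 2, pad_h // 2
    return (new_w, new_h), (left, top, pad_w - left, pad_h - top)


if __name__ == "__main__":
    # A 4:3 landscape image keeps its shape: 224x168 plus 28 px of padding above and below.
    print(letterbox_geometry(640, 480, 224))  # ((224, 168), (0, 28, 0, 28))
```

Compared with squashing, letterboxing spends some of the input area on padding; compared with center-cropping, it loses nothing at the image edges.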

Object Detection and Segmentation

Object detection and segmentation typically operate at higher resolutions than image classification. These tasks must localize objects and delineate regions, and small objects need enough pixels to remain visible after the backbone's downsampling. The trade-off is cost: detection inputs are many times larger than classification crops, so finding the point of diminishing returns matters even more.

For instance, on the COCO dataset, which contains images with a wide range of resolutions, ResNet-50 used as the backbone of Faster R-CNN reached 36.4% mAP at 600x600 and 36.7% at 800x800. The larger input gained only 0.3 points while increasing the pixel count by about 1.8x, which makes 600x600 the better accuracy/cost trade-off in this comparison. Note that detection pipelines commonly resize the shorter side of the image to a target value and cap the longer side, rather than forcing a square input.

| Input Size | Mean Average Precision (mAP) |
|---|---|
| 600x600 | 36.4% |
| 800x800 | 36.7% |
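The shorter-side convention can be sketched as follows. It assumes a `min_size`/`max_size` pair: the image is scaled so its shorter side reaches `min_size` unless that would push the longer side past `max_size`. The defaults of 800 and 1333 match torchvision's Faster R-CNN models, while the original Faster R-CNN work rescaled images so the shorter side was 600 pixels. `detection_resize` is a hypothetical helper, not a library function.

```python
def detection_resize(width: int, height: int, min_size: int = 800, max_size: int = 1333):
    """Scale so the shorter side reaches min_size without the longer side exceeding max_size.

    Returns (new_width, new_height, scale). The aspect ratio is preserved.
    """
    scale = min(min_size / min(width, height), max_size / max(width, height))
    return round(width * scale), round(height * scale), scale


if __name__ == "__main__":
    # Typical 4:3 photo: the shorter side reaches 800.
    print(detection_resize(640, 480)[:2])   # (1067, 800)
    # Very wide panorama: the 1333 cap on the longer side wins instead.
    print(detection_resize(1920, 600)[:2])  # (1333, 417)
```

When comparing published detection numbers, check which resizing convention was used, since a nominal size like 800 often refers to the shorter side rather than a square input.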

Adapting Input Size for Transfer Learning

In transfer learning, the choice of input size can be strategic. Starting with a smaller input size and gradually increasing it during fine-tuning (progressive resizing) can be effective: early epochs are cheaper, and the pre-trained weights adapt gradually, which can reduce both the amount of retraining needed and the risk of overfitting.

For example, when fine-tuning ResNet-50 on a small dataset such as CIFAR-10, whose images are natively 32x32, starting at 32x32 and gradually increasing to 64x64 (by upsampling) can lead to better generalization; in the example below, accuracy rose from 87.2% at 32x32 to 88.5% at 64x64. One plausible reason is architectural: at 32x32, ResNet-50's 32x downsampling leaves only a 1x1 final feature map, while 64x64 leaves 2x2.

| Input Size | CIFAR-10 Accuracy |
|---|---|
| 32x32 | 87.2% |
| 64x64 | 88.5% |
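A gradual size increase can be expressed as a per-epoch schedule. The sketch below linearly interpolates from a start size to an end size and rounds each value to a multiple of 8; both the linear shape and the multiple of 8 are assumptions for illustration, and `progressive_sizes` is a hypothetical helper. Each value would be passed to the resize step of your data pipeline for that epoch.

```python
from __future__ import annotations


def progressive_sizes(start: int, end: int, epochs: int, multiple: int = 8) -> list[int]:
    """Linearly increase the input side from `start` to `end` over `epochs`.

    Each size is rounded to the nearest multiple of `multiple`
    (Python's round() sends exact .5 ties to the even neighbour).
    """
    if epochs < 1:
        raise ValueError("epochs must be at least 1")
    if epochs == 1:
        return [end]
    sizes = []
    for epoch in range(epochs):
        fraction = epoch / (epochs - 1)  # 0.0 at the first epoch, 1.0 at the last
        raw = start + fraction * (end - start)
        sizes.append(round(raw / multiple) * multiple)
    return sizes


if __name__ == "__main__":
    print(progressive_sizes(32, 64, 5))  # [32, 40, 48, 56, 64]
```

A step schedule (a few epochs at each size) is an equally reasonable alternative; the linear version simply spreads the change evenly.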

Dynamic Input Sizes for Efficient Inference

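In some real-world applications the input size is not constant, and the ability to handle varying resolutions is valuable. ResNet-50 handles this well, but the flexibility comes from its design rather than from its residual connections: the network is convolutional up to a global average pooling layer, which reduces a final feature map of any spatial size to a fixed 2048-dimensional vector before the classifier. Accuracy does degrade when the input resolution drifts far from the training resolution, so it is worth validating at the sizes you expect to see.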

This capability is particularly useful where input sizes change frequently, such as in video analysis or when processing images from varied sources. Handling these variations without forcing every input to one fixed size lets ResNet-50 adapt to the diverse needs of real-world applications.
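The reason ResNet-50 accepts different resolutions is visible in its last two layers: global average pooling collapses each channel of the final feature map to a single number, so the fully connected classifier always receives a vector of the same length (2048 for ResNet-50). The pure-Python sketch below mimics that pooling step on toy data; in a real framework this is a single pooling layer, and the helper names here are hypothetical.

```python
def global_average_pool(feature_map):
    """Average each channel of a C x H x W feature map (nested lists) to one value."""
    pooled = []
    for channel in feature_map:
        height, width = len(channel), len(channel[0])
        total = sum(sum(row) for row in channel)
        pooled.append(total / (height * width))
    return pooled


def toy_feature_map(channels: int, height: int, width: int):
    """Toy C x H x W map where every value in channel c equals c."""
    return [[[float(c)] * width for _ in range(height)] for c in range(channels)]


if __name__ == "__main__":
    # Final maps for 224x224 (7x7) and 299x299 (10x10) inputs pool to same-length vectors.
    for side in (7, 10):
        vector = global_average_pool(toy_feature_map(2048, side, side))
        print(f"{side}x{side} map -> vector of length {len(vector)}")
```

The spatial size only has to be at least 1x1. What changes with resolution is how much spatial detail is averaged into each value, which is one reason accuracy still shifts when the input size moves away from the training resolution.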

Practical Tips for Optimizing Input Size

Based on the insights gleaned from empirical studies and real-world examples, here are some practical tips for optimizing the input size of ResNet-50:

- Task-Specific Optimization: Tailor the input size to the task at hand. For classification, sizes near the 224x224 training resolution are a sensible default, with diminishing returns above it; detection and segmentation generally need larger inputs, so look for the point where extra resolution stops paying for its cost.

- Data-Driven Decisions: Analyze the characteristics of your training data. Understand the resolution and aspect ratios of the images to make informed decisions about input size.

- Start Small, Grow Gradually: In scenarios involving transfer learning, consider starting with a smaller input size and gradually increasing it during fine-tuning. This approach can enhance generalization performance while maintaining efficiency.

- Dynamic Input Sizes: Take advantage of ResNet-50's ability to accept varying input sizes, which is useful in real-world applications where sizes vary. Validate accuracy at the resolutions you actually expect, since it can drop when inputs drift far from the training resolution.

- Regular Evaluation: Continuously evaluate the impact of input size on your model's performance. This can help identify the optimal size for your specific use case and dataset.
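To make the evaluation tip concrete, the sketch below picks the smallest input size whose score is within a chosen tolerance of the best one, which is one simple way to act on diminishing returns. The tolerance is a judgment call you set per project, and `smallest_within_tolerance` is a hypothetical helper. The example scores are the ImageNet and COCO figures quoted in this guide; in practice they would come from your own validation runs.

```python
from __future__ import annotations


def smallest_within_tolerance(results: dict[int, float], tolerance: float) -> int:
    """Return the smallest input side whose score is within `tolerance` of the best.

    `results` maps input side length -> validation metric (higher is better),
    and `tolerance` is in the same units as the metric (here, percentage points).
    """
    if not results:
        raise ValueError("results must not be empty")
    best = max(results.values())
    candidates = [side for side, score in results.items() if best - score <= tolerance]
    return min(candidates)


if __name__ == "__main__":
    classification = {224: 76.15, 299: 76.23}  # ImageNet accuracy figures from this guide
    detection = {600: 36.4, 800: 36.7}          # COCO mAP figures from this guide
    print(smallest_within_tolerance(classification, tolerance=0.5))  # 224
    print(smallest_within_tolerance(detection, tolerance=0.5))       # 600
```

A tighter tolerance shifts the choice toward larger inputs: with 0.05 points, the classification example would pick 299 instead.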

Conclusion: Navigating the Input Size Landscape

Optimizing the input size for ResNet-50 is a nuanced task, influenced by a myriad of factors including the nature of the task, available computational resources, characteristics of the training data, and the model’s architectural design. By understanding these factors and leveraging empirical evidence, practitioners can make informed decisions about input size, unlocking the full potential of ResNet-50 for their specific use cases.

As deep learning continues to evolve, the ability to navigate these intricate parameters, such as input size, will become increasingly critical. With the insights provided in this guide, researchers and practitioners can confidently explore the optimal input sizes for ResNet-50, ensuring efficient and effective model performance across a wide range of visual recognition tasks.

How does input size impact ResNet-50’s performance in image classification tasks?

Larger input sizes often lead to better performance in image classification tasks, as they provide more detailed information for the network to learn from. However, there is an optimal point beyond which larger sizes offer diminishing returns.

What are the implications of using smaller input sizes in object detection tasks?

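Object detection generally needs larger inputs than classification, because objects must be localized and small objects need enough pixels to survive downsampling. Smaller input sizes reduce computational overhead, but past a certain point they cost accuracy, especially on small objects. Balancing accuracy against efficiency is the key consideration when choosing input sizes for these tasks.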

How can transfer learning influence the choice of input size for ResNet-50?

In transfer learning scenarios, starting with a smaller input size and gradually increasing it during fine-tuning can be an effective strategy. This approach leverages pre-trained weights while minimizing the need for extensive retraining and the risk of overfitting.