Deciphering XGBoost's Scores: 5 Insights

XGBoost is a gradient-boosted decision tree library that has become a standard tool for predictive modeling on tabular data. It trains efficiently on large datasets and often delivers strong accuracy, which has made it popular with data scientists and analysts worldwide. Interpreting XGBoost's scores, however, takes some care, because the meaning of a score depends on the objective the model was trained with and on how the ensemble combines its trees.

In this article, we will delve into the world of XGBoost scores, offering five expert insights to help you decipher their meaning and implications. By the end of this journey, you'll have a deeper understanding of how XGBoost operates and how to interpret its results, enabling you to leverage this tool more effectively in your data-driven endeavors.

Understanding XGBoost’s Core Principles

XGBoost, short for Extreme Gradient Boosting, is an ensemble learning algorithm that combines multiple weak learners (decision trees) to create a strong predictive model. It is built upon the foundation of gradient boosting, an approach that constructs an additive model in a stage-wise manner, allowing it to optimize for specific objectives.

The algorithm's strength lies in its ability to handle large datasets efficiently, tackle complex problems, and minimize overfitting through regularization techniques. By utilizing a tree-based model, XGBoost can capture non-linear relationships and interactions between variables, making it a versatile tool for a wide range of predictive tasks.

The Significance of XGBoost Scores

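XGBoost scores, often referred to as prediction scores, are the output of the trained model. What a score means depends on the objective. With a classification objective such as `binary:logistic`, the score is an estimated probability of the positive class, based on the input features provided to the model. With a regression objective, the score is the predicted value itself. When the raw margin is requested, the score is the untransformed sum of the trees’ leaf values (log-odds in the binary case).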

Understanding these scores is crucial, as they provide valuable insights into the model's performance and decision-making process. By interpreting these scores, data scientists can gain a deeper understanding of the underlying patterns and relationships within the data, leading to more accurate predictions and informed decision-making.
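To make the link between tree outputs and a probability concrete, here is a minimal sketch that assumes a binary logistic objective. The leaf values and the `base_margin` argument are made-up illustration values, not output from a real model.

```python
import math

def sigmoid(margin: float) -> float:
    """Map a raw margin (log-odds) to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-margin))

def score_from_leaves(leaf_values, base_margin=0.0):
    """Sum the leaf value reached in each tree, then apply the sigmoid.

    leaf_values: one number per tree (hypothetical values here).
    base_margin: starting log-odds before any tree is added (assumed 0.0).
    """
    margin = base_margin + sum(leaf_values)
    return margin, sigmoid(margin)

# Three hypothetical trees, each contributing one leaf value for this row.
margin, prob = score_from_leaves([0.4, -0.1, 0.25])
print(f"raw margin = {margin:.2f}, probability = {prob:.3f}")
```

A positive margin corresponds to a probability above 0.5 and a negative margin to one below it, which is why raw margins and probabilities always rank rows in the same order.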

Interpreting XGBoost Scores: A Step-by-Step Guide

Interpreting XGBoost scores involves a systematic approach, ensuring a thorough understanding of the model’s predictions. Here’s a step-by-step guide to help you decipher these scores:

1. Define the Problem and Objective

Before interpreting XGBoost scores, it’s essential to clearly define the problem you’re trying to solve and the objective of your predictive model. Whether it’s a classification or regression task, understanding the problem context is crucial for accurate interpretation.

2. Evaluate Model Performance

Assessing the performance of your XGBoost model is a critical step. This involves calculating various performance metrics such as accuracy, precision, recall, F1-score, or mean squared error, depending on the nature of your problem. These metrics provide insights into how well the model is performing and help identify potential areas for improvement.

For example, in a binary classification problem, you might calculate the area under the ROC curve (AUC-ROC) to evaluate how well the model's scores rank positive cases above negative ones. An AUC-ROC of 0.5 corresponds to random ranking and 1.0 to perfect separation, so a value close to 1.0 indicates a well-performing model, while a value near 0.5 suggests room for improvement.

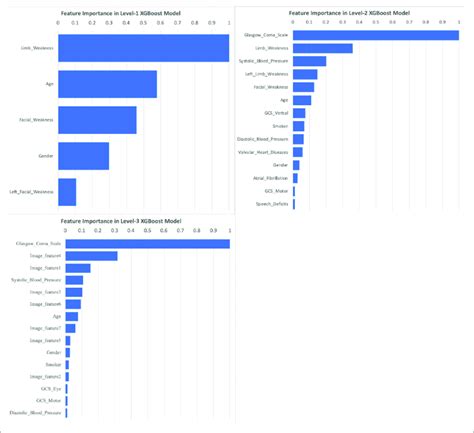

3. Analyze Feature Importance

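XGBoost provides built-in feature importance scores, which rank the input features by their contribution to the model. Several importance types are available, including how often a feature is used to split (weight), the average loss reduction its splits achieve (gain), and roughly how many observations those splits affect (cover), and they can produce different rankings. By examining these importance scores, you can gain insights into which features are most influential in the model’s decision-making process.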

For instance, if you're building a model to predict customer churn, you might find that customer satisfaction and tenure are the most important features, indicating their significant impact on the likelihood of churn. This knowledge can help you prioritize your business strategies and allocate resources effectively.

4. Visualize the Decision Trees

XGBoost’s ensemble model is composed of multiple decision trees. Visualizing these trees can provide valuable insights into the model’s decision-making process. By examining the structure and paths of the trees, you can understand how different features interact and contribute to the final prediction.

For instance, you might observe that the model makes a prediction based on a combination of customer age and purchase history. This visualization can help you identify potential biases or errors in the model and guide further refinement.

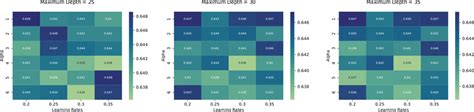

5. Validate and Optimize

Once you’ve gained insights from the previous steps, it’s essential to validate your findings and optimize the model. This involves conducting thorough testing, cross-validation, and fine-tuning of the model’s hyperparameters to ensure its reliability and accuracy.

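For instance, you might discover that the model is overfitting: it scores well on the training data but noticeably worse on held-out folds. Adjusting regularization parameters such as `lambda`, `alpha`, and `gamma`, limiting tree depth, or subsampling rows and columns with `subsample` and `colsample_bytree` can mitigate overfitting and improve the model's generalization performance.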

Advanced Techniques for Interpreting XGBoost Scores

While the basic steps outlined above provide a solid foundation for interpreting XGBoost scores, advanced techniques can further enhance your understanding and enable more nuanced interpretations.

1. Partial Dependence Plots (PDPs)

Partial Dependence Plots visualize the marginal effect of a feature on the predicted outcome, averaging over the observed values of all other features. By creating PDPs for each feature, you can see how changing that feature shifts the model’s average prediction. The averaging assumes the feature can vary independently of the others, so PDPs can mislead when features are strongly correlated.

For instance, in a credit scoring model, a PDP might show that as a customer's credit score increases, their likelihood of default decreases. This insight can help identify critical thresholds or ranges where the model's predictions change significantly.

2. Individual Conditional Expectation (ICE) Plots

ICE plots, similar to PDPs, visualize the effect of a feature on the predicted outcome. However, unlike PDPs, ICE plots show the effect for individual instances rather than an average across all instances. This allows you to understand how the feature impacts predictions for specific instances, providing a more detailed and personalized view.

For example, in a medical diagnosis model, an ICE plot might show that for a particular patient, an increase in a specific biomarker level leads to a higher probability of disease. This personalized insight can guide more targeted treatment plans.

3. Feature Interaction Analysis

XGBoost’s ability to capture complex interactions between features is a significant strength. Analyzing these interactions can provide deeper insights into the underlying relationships within the data. Feature interaction analysis involves examining how changes in one feature impact the effect of another feature on the predicted outcome.

For instance, in a model predicting housing prices, an interaction analysis might reveal that the effect of property size on price is amplified when the property is located in a desirable neighborhood. This insight can guide real estate agents in pricing strategies and help buyers make informed decisions.

Real-World Applications and Case Studies

XGBoost’s versatility and accuracy have led to its widespread adoption across various industries. Let’s explore some real-world applications and case studies to understand how XGBoost scores are interpreted and utilized in practice.

1. Healthcare: Predicting Disease Risk

In the healthcare industry, XGBoost is used to predict disease risk and identify high-risk patients. By interpreting XGBoost scores, healthcare providers can prioritize resources, develop targeted prevention strategies, and provide personalized treatment plans.

For example, a healthcare organization might use XGBoost to predict the risk of developing diabetes based on patient demographics, lifestyle factors, and medical history. By understanding the model's predictions, they can identify individuals at high risk and offer tailored interventions to prevent or manage the disease.

2. Finance: Credit Risk Assessment

In the financial sector, XGBoost is employed to assess credit risk and make lending decisions. Interpreting XGBoost scores helps lenders evaluate the likelihood of default, optimize loan terms, and minimize financial losses.

Consider a bank using XGBoost to predict loan defaults based on borrower characteristics and historical data. By interpreting the model's scores, the bank can identify high-risk borrowers, adjust loan amounts and interest rates accordingly, and develop strategies to mitigate default risks.

3. Marketing: Customer Segmentation and Personalization

XGBoost is a powerful tool for customer segmentation and personalized marketing strategies. By interpreting XGBoost scores, marketers can identify distinct customer segments, understand their preferences and behaviors, and tailor their marketing efforts accordingly.

For instance, an e-commerce company might use XGBoost to segment customers based on their purchase history, browsing behavior, and demographic information. By interpreting the model's scores, they can create targeted marketing campaigns, recommend personalized products, and enhance the overall customer experience.

Future Trends and Innovations in XGBoost

As XGBoost continues to evolve, several trends and innovations are shaping its future. Here’s a glimpse into the potential advancements that could enhance its capabilities and interpretations.

1. Explainable AI and Model Transparency

There is a growing emphasis on developing explainable AI models that provide transparent insights into their decision-making processes. XGBoost, with its tree-based structure, already offers a certain level of interpretability. However, ongoing research aims to further enhance its transparency and make its predictions more understandable to both experts and non-experts.

2. Integration with Deep Learning

The integration of XGBoost with deep learning techniques is an emerging trend. By combining the strengths of tree-based models with neural networks, researchers aim to create more powerful and accurate predictive models. Whether such hybrids are easier to interpret remains an open question: neural components are generally harder to explain than trees, so interpretation methods would need to cover both parts of the model.

3. Advancements in Regularization Techniques

Regularization techniques play a crucial role in preventing overfitting and improving the generalization performance of XGBoost models. Ongoing research focuses on developing more advanced regularization methods, such as adaptive regularization, which could enhance the model’s ability to handle complex datasets and provide more accurate interpretations.

Conclusion

XGBoost’s scores are a powerful tool for data-driven decision-making, offering insights into the underlying patterns and relationships within complex datasets. By understanding and interpreting these scores, data scientists and analysts can unlock the full potential of this advanced machine learning algorithm.

Through a combination of basic and advanced techniques, real-world applications, and ongoing innovations, XGBoost continues to push the boundaries of predictive modeling. As we move forward, the insights gained from XGBoost scores will play a pivotal role in shaping industries, driving innovation, and guiding strategic decisions.

How does XGBoost handle missing data?

XGBoost handles missing data natively rather than imputing it. During training, each split learns a default direction, and rows whose value for that feature is missing are sent down that branch, which is chosen as the direction that most reduces the training loss. This allows the model to process incomplete data without a separate imputation step.

What are some common challenges when working with XGBoost?

Common challenges include overfitting, especially when dealing with small datasets. Additionally, XGBoost’s tree-based structure can make it challenging to interpret complex interactions between features. Proper hyperparameter tuning and regularization techniques are essential to mitigate these challenges.

Can XGBoost handle categorical variables?

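Yes, with some preparation. XGBoost’s trees operate on numeric inputs, so categorical variables are typically converted beforehand with one-hot encoding or label encoding. Recent releases also offer native categorical support, enabled through the `enable_categorical` option for columns with a categorical dtype. One-hot encoding suits low-cardinality features, while label encoding imposes an arbitrary order on the categories that the trees then have to split around.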