4 Steps to Convert Normal Maps

Converting normal maps is a core task in 3D graphics and rendering, especially when working with complex models and textures. A normal map lets artists and developers add fine surface detail and apparent depth without adding geometry. The process has several steps, and each one depends on understanding how normals are stored and used. This article walks through four of them: preparing the base texture, generating the normal map, optimizing and testing it, and integrating it into a model.

Understanding Normal Maps

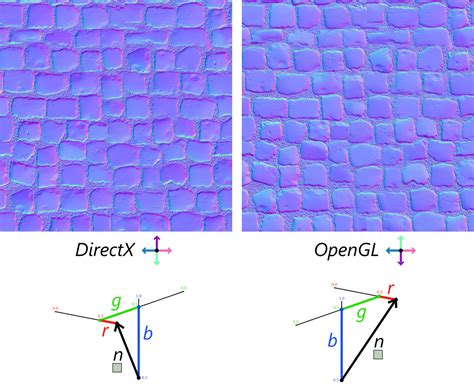

Before diving into the conversion process, it helps to understand what normal maps are and why they matter in 3D graphics. A normal map is a texture used to simulate surface detail under lighting. Unlike color textures, which store color information, normal maps store surface normals: vectors that give the orientation of the surface at each point, usually encoded in the image's RGB channels. The renderer uses these normals when it computes how light interacts with the model, which creates the illusion of depth and complexity.
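The storage scheme described above can be sketched in a few lines. This is a minimal illustration, assuming the common 8-bit convention in which each component in [-1, 1] is remapped to [0, 255]; the function names are hypothetical and not taken from any particular tool.

```python
import math

def encode_normal(n):
    """Map a unit normal with components in [-1, 1] to 8-bit RGB."""
    return tuple(round((c * 0.5 + 0.5) * 255) for c in n)

def decode_normal(rgb):
    """Map 8-bit RGB back to a normal and restore unit length."""
    x, y, z = (c / 255 * 2.0 - 1.0 for c in rgb)
    length = math.sqrt(x * x + y * y + z * z)
    return (x / length, y / length, z / length)

# A normal pointing straight out of the surface.
flat_rgb = encode_normal((0.0, 0.0, 1.0))
flat_normal = decode_normal(flat_rgb)
```

Under this convention a flat surface encodes to a light blue-purple, which is why tangent-space normal maps tend to look predominantly blue.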

Normal maps are particularly useful for adding intricate details to low-polygon models, as they can mimic the appearance of high-resolution surfaces without increasing the model's polygon count. This makes them an invaluable tool for optimizing 3D assets, especially in real-time applications such as video games and interactive simulations.
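To see why a per-pixel normal changes the apparent shape of a flat polygon, consider a simple diffuse (Lambert) term. The sketch below is an assumption-level illustration rather than any engine's shading code: it compares a flat normal with one tilted toward the light.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def lambert(normal, light_dir):
    """Diffuse intensity: cosine of the angle between normal and light, clamped at 0."""
    n = normalize(normal)
    l = normalize(light_dir)
    return max(0.0, sum(a * b for a, b in zip(n, l)))

light = (1.0, 0.0, 1.0)                       # light coming from the upper right
flat = lambert((0.0, 0.0, 1.0), light)        # geometric normal of the polygon
tilted = lambert((0.6, 0.0, 0.8), light)      # normal read from a normal map
```

The tilted normal receives more light than the flat one even though the geometry is identical, which is how a normal map suggests bumps and grooves on a low-polygon surface.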

Step 1: Prepare the Base Texture

The first step in converting normal maps is to prepare the base texture that will serve as the foundation for the normal map. This texture typically represents the color or albedo of the surface, providing the visual context for the normal map’s details.

- Select an Appropriate Texture: Choose a high-quality texture with a suitable resolution. The texture should have a good level of detail and accurately represent the surface you wish to convert.

- Adjust Texture Properties: Ensure the texture's color space is appropriate for your workflow. Color (albedo) textures are usually stored in sRGB, whereas normal maps hold vector data and should be treated as linear, non-color data. Convert or re-tag the texture if necessary to maintain accuracy.

- Optimize Texture Size: Consider the size of the texture relative to the model's size. Overly large textures can increase file size and processing time, while excessively small textures may result in noticeable pixelation. Aim for a balance between detail and performance.
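If the base texture is stored in sRGB and you plan to derive height or normals from it, it is usually safer to convert its values to linear first. The following is a minimal sketch of the standard sRGB transfer functions for a single channel value in [0, 1]; the function names are illustrative.

```python
def srgb_to_linear(c):
    """Convert one sRGB-encoded channel value in [0, 1] to linear light."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4

def linear_to_srgb(c):
    """Convert one linear channel value in [0, 1] back to sRGB encoding."""
    if c <= 0.0031308:
        return c * 12.92
    return 1.055 * c ** (1 / 2.4) - 0.055

mid_gray_linear = srgb_to_linear(0.5)
```

An sRGB mid-gray of 0.5 corresponds to roughly 0.21 in linear terms, so skipping the conversion noticeably skews any brightness-based processing.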

Advanced Tip: Texture Baking

In some cases, especially for complex models, it may be beneficial to bake additional texture information onto the base texture. This process, known as texture baking, involves projecting certain attributes, such as ambient occlusion or cavity maps, onto the texture. These additional details can enhance the realism and complexity of the normal map conversion.

| Texture Type | Description |
|---|---|
| Ambient Occlusion | Simulates self-shadowing and creases, adding depth and realism. |
| Cavity Map | Highlights areas of indentation and protrusion, useful for detailed normal map conversion. |
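One simple way to combine a baked map with the base texture is to multiply the color by the occlusion value. The sketch below assumes images are plain nested lists of float values in [0, 1] and that an occlusion value of 1 means fully open; `apply_ao` and its `strength` parameter are hypothetical names used only for illustration. Many physically based workflows keep ambient occlusion as a separate map instead.

```python
def apply_ao(albedo, ao, strength=1.0):
    """Darken each RGB pixel by its ambient-occlusion value (0 = occluded, 1 = open)."""
    result = []
    for color_row, ao_row in zip(albedo, ao):
        out_row = []
        for (r, g, b), occlusion in zip(color_row, ao_row):
            # strength = 0 leaves the color untouched, 1 applies the full AO value.
            k = 1.0 - strength * (1.0 - occlusion)
            out_row.append((r * k, g * k, b * k))
        result.append(out_row)
    return result

baked = apply_ao([[(1.0, 0.5, 0.2)]], [[0.5]])
```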

Step 2: Generate the Normal Map

With the base texture prepared, the next step is to generate the normal map itself. This process involves several techniques, each suited to different scenarios and desired outcomes.

- Photogrammetry: For highly detailed and realistic normal maps, photogrammetry is an excellent choice. This technique involves capturing multiple photographs of a real-world object or scene and using specialized software to reconstruct the object's surface, from which normals can then be derived. Photogrammetry can produce very accurate normal maps, but it requires a controlled environment and careful photography.

- Normal Map Filters: Many 3D applications offer built-in normal map filters that can convert color textures into normal maps. These filters typically treat pixel brightness as height and derive surface normals from the brightness gradients. Because brightness is only a proxy for real surface height, the result is less accurate than photogrammetry, but these filters are quick and convenient for basic normal map generation.

- Custom Normal Map Generation: Advanced users may opt to create custom normal maps using image editing software. This process involves manually manipulating the RGB channels of an image to represent surface normals. While time-consuming, this method offers precise control over the normal map's details.
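The filter-based approach can be sketched as follows. This is a simplified illustration under stated assumptions, not the algorithm of any specific application: brightness (Rec. 709 luminance) stands in for height, gradients are taken with central differences, edges wrap so tiling textures stay seamless, and images are plain nested lists. The sign of the Y component depends on the target convention (OpenGL-style and DirectX-style maps differ), so treat it as adjustable.

```python
import math

def luminance(rgb):
    """Approximate brightness of a linear RGB pixel using Rec. 709 weights."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def height_to_normals(height, strength=1.0):
    """Convert a 2D grid of heights in [0, 1] to unit tangent-space normals."""
    rows = len(height)
    cols = len(height[0])
    normals = []
    for y in range(rows):
        row = []
        for x in range(cols):
            # Central differences; the modulo wraps around the image edges.
            dx = (height[y][(x + 1) % cols] - height[y][(x - 1) % cols]) * 0.5
            dy = (height[(y + 1) % rows][x] - height[(y - 1) % rows][x]) * 0.5
            # The normal leans away from the direction in which height increases.
            nx, ny, nz = -dx * strength, -dy * strength, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            row.append((nx / length, ny / length, nz / length))
        normals.append(row)
    return normals

# Usage: a tiny grayscale image that gets brighter from left to right.
image = [[(0.0, 0.0, 0.0), (0.5, 0.5, 0.5), (1.0, 1.0, 1.0)] for _ in range(3)]
height = [[luminance(pixel) for pixel in row] for row in image]
normals = height_to_normals(height, strength=2.0)
```

A larger `strength` exaggerates the slopes, which corresponds to the intensity control most filters expose.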

Optimizing Normal Map Quality

Regardless of the generation method, several techniques can enhance the quality of the normal map:

- High-Quality Filters: If using built-in filters, choose high-quality options that offer greater control over the normal map's intensity and detail.

- Post-Processing: Apply additional post-processing to the normal map, such as sharpening or noise reduction, to enhance its clarity and detail. These operations change the length of the stored vectors, so renormalize the map afterwards.

- Normal Map Compression: To reduce file size and improve performance, consider compressing the normal map with a format suited to vector data, such as BC5, which stores only two channels. This can be especially beneficial for real-time applications.
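Two of the points above lend themselves to a short sketch: restoring unit length after filtering, and rebuilding the third component when a two-channel format keeps only X and Y. The code assumes tangent-space normals, where Z is never negative; the helper names are illustrative.

```python
import math

def renormalize(n):
    """Restore unit length after filtering; fall back to a flat normal if degenerate."""
    x, y, z = n
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0.0:
        return (0.0, 0.0, 1.0)
    return (x / length, y / length, z / length)

def reconstruct_z(x, y):
    """Rebuild Z of a unit normal from X and Y, assuming Z >= 0 (tangent space)."""
    # Clamp at zero because compression error can push x*x + y*y slightly above 1.
    return math.sqrt(max(0.0, 1.0 - x * x - y * y))

blurred = renormalize((0.3, 0.0, 0.4))   # a shortened vector, as blurring might produce
z = reconstruct_z(0.6, 0.0)              # Z recovered from a two-channel texel
```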

Step 3: Optimize and Test

Once the normal map has been generated, it’s crucial to optimize and test it to ensure it meets the desired quality standards and performs well in its intended application.

- Normal Map Resolution: Evaluate the normal map's resolution in relation to the model's size and detail. Ensure the normal map is not overly large, as this can impact performance. Downscale the normal map if necessary while maintaining its visual quality.

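- Normal Map Format: Choose an appropriate file format for the normal map. Common formats include .TGA, .DDS, and .PNG. PNG and TGA are lossless and widely supported for exchanging files between tools, while DDS can store GPU block-compressed data together with mipmaps, which suits real-time engines.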

- Test in Real-Time: If the normal map is intended for real-time applications, test it in the target engine or software. Pay attention to performance, especially on lower-end hardware, to ensure the normal map doesn't negatively impact frame rates.
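When weighing resolution against performance, a rough memory estimate is often enough to guide the decision. The sketch below is a back-of-the-envelope calculation that assumes a whole number of bytes per pixel (4 for uncompressed RGBA8, about 1 for BC5) and ignores the 4x4 block alignment that block-compressed formats impose on small mip levels.

```python
def texture_bytes(width, height, bytes_per_pixel, mipmaps=True):
    """Estimate texture memory, optionally including the full mip chain."""
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_pixel
        if not mipmaps or (w == 1 and h == 1):
            break
        # Each mip level halves both dimensions, never going below one pixel.
        w, h = max(1, w // 2), max(1, h // 2)
    return total

uncompressed_2k = texture_bytes(2048, 2048, 4, mipmaps=False)
uncompressed_2k_mips = texture_bytes(2048, 2048, 4)
two_channel_1k_mips = texture_bytes(1024, 1024, 1)
```

Under these assumptions a 2048 x 2048 RGBA8 map takes 16 MiB without mipmaps and about a third more with them, so halving the resolution or switching to a compressed format has a large effect.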

Performance Optimization Techniques

To further optimize performance, consider the following techniques:

- Mipmapping: Generate mipmaps for the normal map to improve rendering efficiency, especially at different viewing distances.

- Normal Map Packing: Combine multiple normal maps into a single texture to reduce the number of texture lookups and improve performance.

- Level of Detail (LOD): Implement LOD systems to dynamically adjust the normal map's detail based on the viewer's proximity to the model.
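Mipmaps for normal maps need slightly different handling from color mipmaps, because averaging vectors shortens them. A minimal sketch, assuming a power-of-two grid of unit normals stored as nested lists, averages each 2x2 block and renormalizes the result; engines and texture tools typically do this for you, often with better filters.

```python
import math

def downsample_normals(normals):
    """Halve a normal grid by averaging 2x2 blocks and renormalizing each result."""
    rows, cols = len(normals), len(normals[0])
    out = []
    for y in range(0, rows - 1, 2):
        out_row = []
        for x in range(0, cols - 1, 2):
            block = (normals[y][x], normals[y][x + 1],
                     normals[y + 1][x], normals[y + 1][x + 1])
            sx = sum(n[0] for n in block)
            sy = sum(n[1] for n in block)
            sz = sum(n[2] for n in block)
            length = math.sqrt(sx * sx + sy * sy + sz * sz)
            if length == 0.0:
                out_row.append((0.0, 0.0, 1.0))  # opposing normals cancelled out
            else:
                out_row.append((sx / length, sy / length, sz / length))
        out.append(out_row)
    return out

def build_mip_chain(normals):
    """Return the list of mip levels, from full resolution down to the smallest."""
    chain = [normals]
    while len(chain[-1]) > 1 and len(chain[-1][0]) > 1:
        chain.append(downsample_normals(chain[-1]))
    return chain

flat_map = [[(0.0, 0.0, 1.0)] * 4 for _ in range(4)]
mips = build_mip_chain(flat_map)
```

A LOD system can then pick a coarser level from this chain for distant objects.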

Step 4: Integrate and Refine

With the normal map optimized and tested, the final step is to integrate it into the 3D model and refine its appearance.

- Assign the Normal Map: Apply the normal map to the model's material or shader, ensuring it is correctly mapped and aligned with the base texture.

- Adjust Normal Map Strength: Fine-tune the normal map's intensity to achieve the desired level of detail and depth. This can be done through material settings or by scaling the map's X and Y (red and green) components and renormalizing.

- Additional Textures: Consider adding other texture maps, such as specular or roughness maps, to further enhance the model's appearance and realism.
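Adjusting strength outside the material settings usually comes down to scaling the X and Y components of each decoded normal and renormalizing. The sketch below works on a single decoded normal; `scale_normal_strength` is a hypothetical helper, and a real tool would apply it to every texel before re-encoding.

```python
import math

def scale_normal_strength(normal, strength):
    """Scale the tilt of a tangent-space normal; 0 flattens it, values above 1 exaggerate it."""
    x, y, z = normal
    x *= strength
    y *= strength
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0.0:
        return (0.0, 0.0, 1.0)  # degenerate input: fall back to a flat normal
    return (x / length, y / length, z / length)

original = (0.6, 0.0, 0.8)
flattened = scale_normal_strength(original, 0.0)
stronger = scale_normal_strength(original, 2.0)
```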

Advanced Integration Techniques

For more advanced users, several techniques can take normal map integration to the next level:

- Parallax Mapping: Implement parallax mapping, which offsets texture coordinates based on a height map and the view direction, to add apparent depth beyond what the normal map alone provides, especially in areas with intricate details.

- Screen-Space Reflections: Use screen-space reflections to simulate reflections on the model's surface, enhancing its visual fidelity.

- Subsurface Scattering: For organic materials like skin or translucent objects, consider implementing subsurface scattering to accurately simulate the scattering of light beneath the surface.
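Of the techniques above, basic parallax mapping is the simplest to sketch. The version below follows the common single-sample formulation and assumes a tangent-space view direction, a depth value in [0, 1] (1 = deepest), and an artist-chosen `scale`; sign conventions and parameter names vary between engines, so treat these as assumptions. In practice this runs per pixel in a shader rather than in Python.

```python
def parallax_offset_uv(uv, view_dir, depth, scale=0.05):
    """Shift UVs along the tangent-space view direction in proportion to depth."""
    u, v = uv
    vx, vy, vz = view_dir
    # Guard against grazing angles, where vz approaches zero and the offset explodes.
    vz = max(vz, 1e-4)
    amount = depth * scale
    return (u - vx / vz * amount, v - vy / vz * amount)

head_on = parallax_offset_uv((0.5, 0.5), (0.0, 0.0, 1.0), depth=1.0)
angled = parallax_offset_uv((0.5, 0.5), (0.6, 0.0, 0.8), depth=1.0, scale=0.1)
```

Looking straight at the surface produces no shift, while an angled view moves the lookup further the deeper the sampled point is.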

Conclusion

Converting normal maps is a powerful technique that allows artists and developers to add intricate details and depth to their 3D models. By following these four steps (preparing the base texture, generating the normal map, optimizing and testing, and integrating and refining), you can achieve professional-quality results. Each step requires careful consideration and experimentation to find the optimal settings for your specific project and desired outcome.

How do normal maps improve visual quality in 3D graphics?

Normal maps simulate surface details and lighting, adding depth and complexity to low-polygon models without increasing polygon count. This enhances visual fidelity and realism.

What is the best method for generating normal maps?

The best method depends on the desired outcome and available resources. Photogrammetry offers high accuracy but requires specialized equipment, while normal map filters are quick and convenient for basic conversions.

How can I optimize normal map performance in real-time applications?

To optimize performance, consider techniques like mipmapping, normal map packing, and LOD systems. These methods reduce texture lookups and adjust detail based on viewing distance, improving overall efficiency.