The Ultimate Guide: 10 Probability Laws

The Intriguing World of Probability Laws: Navigating Uncertainty with Confidence

Probability laws, often referred to as axioms or rules, are the fundamental principles that govern the mathematical study of uncertainty. These laws provide a framework for understanding and quantifying the likelihood of events, forming the backbone of probability theory and its numerous applications across diverse fields. From physics and finance to machine learning and data science, a solid grasp of probability laws is essential for making informed decisions and predictions in an uncertain world.

In this comprehensive guide, we delve into ten cornerstone probability laws, exploring their significance, applications, and real-world implications. By unraveling the intricacies of these laws, we aim to empower readers with a deeper understanding of probability theory and its role in shaping our decisions and interpreting data.

1. The Axiom of Sample Space

The starting point of probability theory is the sample space. Although it is often listed alongside the axioms, it is strictly a definition rather than an axiom: it establishes the foundation for all probability calculations by defining the set of all possible outcomes. The sample space, denoted as Ω, encompasses every possible outcome of an experiment, and an event is any collection of those outcomes, that is, a subset of Ω.

For example, consider the act of tossing a fair six-sided die. The sample space for this experiment consists of six possible outcomes: {1, 2, 3, 4, 5, 6}. Each outcome represents a unique face of the die. By clearly defining the sample space, we establish the scope of our analysis and ensure that all possible outcomes are considered in our probability calculations.

The axiom of sample space is a critical concept that underpins all probability theory. It provides a systematic approach to identifying and organizing the set of all possible outcomes, allowing for precise and consistent probability calculations.
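
As a minimal sketch of the idea, the die's sample space can be written as a Python set, with events as subsets of it. The event names below are illustrative choices, not part of any standard notation.

```python
# Sample space for one roll of a six-sided die.
omega = {1, 2, 3, 4, 5, 6}

# Events are subsets of the sample space (the names are illustrative).
even = {x for x in omega if x % 2 == 0}
greater_than_four = {x for x in omega if x > 4}

# Set operations build new events from existing ones.
print(even | greater_than_four)  # union: "even or greater than four"
print(even & greater_than_four)  # intersection: "even and greater than four"
print(omega - even)              # complement: "not even"
```

Treating events as sets keeps every later calculation tied to an explicit list of outcomes.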

2. The Axiom of Probability

The second cornerstone is the set of probability axioms (the Kolmogorov axioms), which assign a numerical value to each event, that is, to each subset of the sample space. This value, known as the probability, represents the likelihood of the event occurring. The axioms assert that the probability of any event is a non-negative real number, that the probability of the entire sample space equals 1, and that the probability of a union of mutually exclusive events is the sum of their individual probabilities. For a finite sample space, this means the probabilities of the individual outcomes sum to 1.

In our die-tossing example, the probability of each outcome is 1⁄6, as there are six equally likely outcomes. Therefore, the sum of probabilities for all six outcomes is 1, satisfying the axiom of probability.

The axiom of probability provides a quantitative framework for expressing uncertainty. It allows us to assign numerical values to events, enabling us to make informed decisions and predictions based on the likelihood of outcomes.
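
The checks below are a small sketch of these requirements for the die example, assuming a fair die so that each outcome has probability 1⁄6. The helper `prob` is a hypothetical name introduced here for illustration.

```python
from fractions import Fraction

omega = [1, 2, 3, 4, 5, 6]
# Assumption: the die is fair, so every outcome gets the same probability.
p = {outcome: Fraction(1, len(omega)) for outcome in omega}

def prob(event):
    """Probability of an event (a collection of outcomes) under p."""
    return sum((p[o] for o in event), Fraction(0))

# Non-negativity: no outcome has a negative probability.
assert all(value >= 0 for value in p.values())
# Normalization: the whole sample space has probability 1.
assert prob(omega) == 1
# Additivity: for disjoint events, probabilities add.
low, high = {1, 2}, {5, 6}
assert prob(low | high) == prob(low) + prob(high)

print(prob({2, 4, 6}))  # probability of an even roll: 1/2
```

Exact fractions are used instead of floats so that the equality checks are not affected by rounding.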

3. The Law of Total Probability

The law of total probability, also known as the law of alternatives, is a powerful tool for calculating the probability of an event by splitting it into cases. Suppose the events B1, B2, ..., Bn are mutually exclusive and together cover the entire sample space (that is, they form a partition). The law states that the probability of an event A is the sum, over all cases, of the conditional probability of A given Bi weighted by the probability of Bi: P(A) = P(A | B1) P(B1) + ... + P(A | Bn) P(Bn).

For instance, suppose red, blue, and green marbles are spread across several bags, and we first pick a bag at random and then draw a marble from it. To calculate the probability of drawing a red marble, we consider each bag in turn: the probability of picking that bag, multiplied by the probability of drawing red from it. The law of total probability allows us to add these products to obtain the overall probability of drawing a red marble.

The law of total probability is particularly useful when dealing with complex systems or scenarios with multiple possible outcomes. It enables us to break down the problem into simpler components, making probability calculations more manageable.
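
To make the decomposition concrete, here is a minimal sketch that assumes a hypothetical two-stage experiment: one of two bags is chosen with equal probability, then a marble is drawn from it. The bag contents and the function names are invented for illustration.

```python
from fractions import Fraction

# Hypothetical setup: pick a bag first, then draw one marble from it.
bag_contents = {
    "bag_a": {"red": 3, "blue": 1, "green": 1},
    "bag_b": {"red": 1, "blue": 2, "green": 2},
}
p_bag = {"bag_a": Fraction(1, 2), "bag_b": Fraction(1, 2)}

def p_color_given_bag(color, bag):
    """P(color | bag), assuming each marble in the bag is equally likely."""
    counts = bag_contents[bag]
    return Fraction(counts[color], sum(counts.values()))

def p_color(color):
    """P(color) = sum over bags of P(color | bag) * P(bag)."""
    return sum(
        (p_color_given_bag(color, bag) * p_bag[bag] for bag in p_bag),
        Fraction(0),
    )

print(p_color("red"))  # (3/5)(1/2) + (1/5)(1/2) = 2/5
```

The bags play the role of the partition: exactly one of them is chosen, so weighting each conditional probability by the chance of its bag accounts for every way of drawing red.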

4. The Law of Conditional Probability

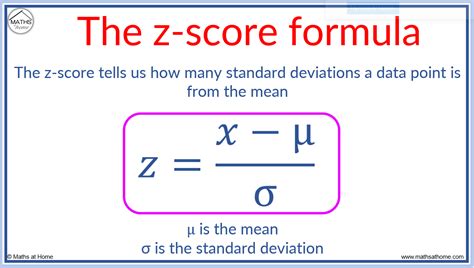

Conditional probability is a fundamental concept in probability theory, representing the probability of an event given that another event is known to have occurred. The law of conditional probability relates the two: for events A and B with P(B) > 0, the probability of A given B is P(A | B) = P(A ∩ B) / P(B), the probability that both occur divided by the probability of B.

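In our marble example, if we know that the marble drawn is red, the conditional probability that it is red is 1, as it is certain; if we know that it is not red, the conditional probability that it is red is 0, as it is impossible. A less trivial case: when every marble in a bag is equally likely to be drawn, the conditional probability of red given that the marble is not green is the number of red marbles in the bag divided by the combined number of red and blue marbles.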

Conditional probability allows us to refine our understanding of events by considering additional information or constraints. It is a powerful tool for analyzing complex systems and making predictions based on specific conditions or prior knowledge.
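
The definition P(A | B) = P(A ∩ B) / P(B) can be sketched by direct counting. The example below assumes a fair six-sided die; `prob` and `cond_prob` are hypothetical helper names.

```python
from fractions import Fraction

omega = {1, 2, 3, 4, 5, 6}

def prob(event):
    # Fair die assumed: favourable outcomes divided by total outcomes.
    return Fraction(len(event & omega), len(omega))

def cond_prob(a, b):
    """P(A | B) = P(A and B) / P(B), defined only when P(B) > 0."""
    if prob(b) == 0:
        raise ValueError("conditioning event has probability zero")
    return prob(a & b) / prob(b)

even = {2, 4, 6}
greater_than_three = {4, 5, 6}

print(prob(even))                           # 1/2
print(cond_prob(even, greater_than_three))  # 2/3
```

Learning that the roll exceeds three raises the probability of an even result from 1⁄2 to 2⁄3, because two of the three remaining outcomes are even.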

5. The Multiplication Rule

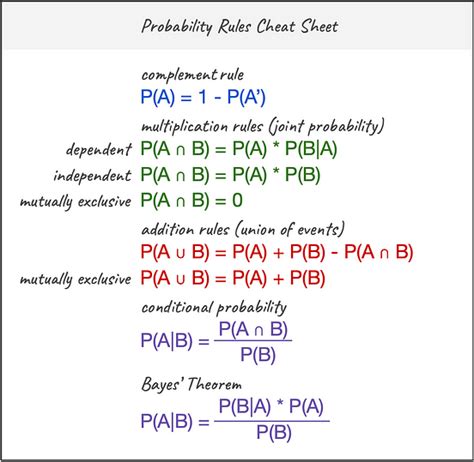

The multiplication rule, also known as the product rule, is a fundamental law for calculating the probability of the intersection of two or more events. This rule states that the probability of the intersection of events A and B is equal to the product of the probability of event A and the conditional probability of event B given event A.

For example, consider a standard deck of cards from which two cards are drawn without replacement. To calculate the probability of drawing a king and then a heart, we use the multiplication rule. The probability of drawing a king first is 4⁄52 (as there are four kings in a standard deck). The conditional probability of drawing a heart second depends on which king was drawn: if it was the king of hearts (one case in four), 12 of the remaining 51 cards are hearts; otherwise 13 of them are. Averaging the two cases gives (1⁄4) * (12⁄51) + (3⁄4) * (13⁄51) = 1⁄4. The multiplication rule then gives the probability of both events occurring: (4⁄52) * (1⁄4) = 1⁄52.

The multiplication rule is a powerful tool for calculating joint probabilities and understanding the relationship between multiple events. It allows us to model complex scenarios and make predictions based on the interplay of different factors.
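
One way to check a joint probability like "king first, then heart" is brute-force enumeration. The sketch below assumes two cards drawn without replacement from a standard 52-card deck, with every ordered pair equally likely; the variable names are illustrative.

```python
from fractions import Fraction
from itertools import permutations

ranks = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
suits = ["hearts", "diamonds", "clubs", "spades"]
deck = [(rank, suit) for rank in ranks for suit in suits]

# All ordered two-card draws without replacement: 52 * 51 equally likely pairs.
draws = list(permutations(deck, 2))

king_first = [d for d in draws if d[0][0] == "K"]
king_then_heart = [d for d in king_first if d[1][1] == "hearts"]

p_king = Fraction(len(king_first), len(draws))
p_heart_given_king = Fraction(len(king_then_heart), len(king_first))
p_joint = Fraction(len(king_then_heart), len(draws))

# Multiplication rule: P(A and B) = P(A) * P(B | A).
assert p_joint == p_king * p_heart_given_king
print(p_king, p_heart_given_king, p_joint)  # 1/13 1/4 1/52
```

Counting pairs directly avoids having to reason about which king was drawn first; the enumeration handles the king of hearts case automatically.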

6. The Addition Rule

The addition rule, also known as the sum rule, is another fundamental law in probability theory. This rule states that the probability of the union of two events is equal to the sum of their individual probabilities minus the probability of their intersection: P(A ∪ B) = P(A) + P(B) - P(A ∩ B). For more than two events, the rule generalizes to the inclusion-exclusion principle.

In our card-drawing example, if we want to calculate the probability that a single card drawn from the deck is either a king or a heart, we use the addition rule. The probability of drawing a king is 4⁄52, and the probability of drawing a heart is 13⁄52. Exactly one card, the king of hearts, is both a king and a heart, so the probability of the intersection is 1⁄52. Therefore, the probability of drawing either a king or a heart is (4⁄52) + (13⁄52) - (1⁄52) = 16⁄52 = 4⁄13.

The addition rule is essential for understanding the likelihood of events that are not mutually exclusive. It allows us to calculate the probability that at least one of several events occurs without double-counting the outcomes they share; for mutually exclusive events the intersection term is zero and the probabilities simply add.
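
The rule can be verified by counting cards. This sketch assumes a single card drawn uniformly at random from a standard 52-card deck; `prob` is a hypothetical helper.

```python
from fractions import Fraction

ranks = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
suits = ["hearts", "diamonds", "clubs", "spades"]
deck = {(rank, suit) for rank in ranks for suit in suits}

kings = {card for card in deck if card[0] == "K"}
hearts = {card for card in deck if card[1] == "hearts"}

def prob(event):
    # One card drawn uniformly at random from the deck.
    return Fraction(len(event), len(deck))

union_directly = prob(kings | hearts)
union_by_rule = prob(kings) + prob(hearts) - prob(kings & hearts)

assert union_directly == union_by_rule
print(prob(kings & hearts), union_directly)  # 1/52 4/13
```

Subtracting the intersection removes the king of hearts, which would otherwise be counted once as a king and once as a heart.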

7. Bayes’ Theorem

Bayes’ theorem, named after the Reverend Thomas Bayes, is a fundamental result in probability theory that allows us to update our beliefs or predictions based on new information or evidence. For a hypothesis A and evidence B with P(B) > 0, it states that P(A | B) = P(B | A) P(A) / P(B): the updated (posterior) probability of A is the prior probability P(A) multiplied by the likelihood of the evidence under A and divided by the overall probability of the evidence.

Bayes’ theorem is particularly useful in situations where prior knowledge or beliefs play a significant role in our predictions. For example, in medical diagnostics, Bayes’ theorem is used to calculate the probability of a patient having a particular disease given the results of a diagnostic test.

Bayes’ theorem is a powerful tool for updating our understanding of events based on new information. It has broad applications in fields such as machine learning, statistics, and decision-making, where prior knowledge and evidence are crucial for making accurate predictions.
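
The diagnostic-test case can be sketched as follows. The prevalence, sensitivity, and specificity values are hypothetical numbers chosen only for illustration, and `posterior` is an illustrative function name.

```python
from fractions import Fraction

def posterior(prior, sensitivity, specificity):
    """P(disease | positive test) via Bayes' theorem."""
    true_positive = sensitivity * prior               # P(positive | disease) * P(disease)
    false_positive = (1 - specificity) * (1 - prior)  # P(positive | healthy) * P(healthy)
    return true_positive / (true_positive + false_positive)

# Hypothetical inputs: 1% prevalence, 95% sensitivity, 90% specificity.
p = posterior(
    prior=Fraction(1, 100),
    sensitivity=Fraction(95, 100),
    specificity=Fraction(90, 100),
)
print(p, float(p))  # 19/217, roughly 0.0876
```

Under these assumed numbers, a positive result raises the probability of disease from 1% to under 9%, because false positives from the much larger healthy group outnumber the true positives.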

8. The Law of Large Numbers

The law of large numbers is a fundamental principle in probability theory that asserts that as the number of independent, identically distributed trials increases, the average of the results converges to the expected value (provided that expected value exists). In other words, over a large number of trials, the observed relative frequency of an event approaches its theoretical probability.

For example, consider the act of flipping a fair coin. If we flip the coin a single time, we might observe a heads or a tails. However, if we flip the coin a large number of times, the proportion of heads will tend to approach 50%, which is the probability of heads on each flip.

The law of large numbers is a cornerstone of statistical inference and probability theory. It provides a foundation for understanding the behavior of random variables and justifies the use of statistical methods to make reliable estimates and predictions based on large samples.
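
A simulation gives a rough feel for this convergence. The sketch below assumes a fair coin simulated with Python's pseudo-random generator and a fixed seed for repeatability; the function name and checkpoint sizes are illustrative choices.

```python
import random

def running_proportion_of_heads(n_flips, seed=0):
    """Simulate fair coin flips and record the proportion of heads at checkpoints."""
    rng = random.Random(seed)
    heads = 0
    checkpoints = {}
    for i in range(1, n_flips + 1):
        heads += rng.random() < 0.5  # True counts as 1, False as 0
        if i in (10, 100, 1_000, 10_000, 100_000):
            checkpoints[i] = heads / i
    return checkpoints

for n, proportion in running_proportion_of_heads(100_000).items():
    print(n, proportion)
```

The early proportions can stray noticeably from 0.5, while the later ones typically settle close to it. The law describes this long-run tendency, not a guarantee for any particular run.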

9. The Central Limit Theorem

The central limit theorem is a powerful result in probability theory that describes the behavior of the sum or average of a large number of independent, identically distributed random variables with finite variance. It states that as the number of random variables increases, the distribution of their suitably standardized sum or average (centered at its mean and scaled by its standard deviation) approaches a normal distribution, regardless of the original distribution of the individual variables.

For instance, consider rolling a fair six-sided die multiple times and calculating the average of the outcomes. As the number of rolls increases, the distribution of the average will tend to follow a normal distribution, even though the individual rolls follow a uniform distribution.

The central limit theorem is a fundamental result in probability theory with wide-ranging applications. It underpins many statistical methods and allows us to make reliable inferences and predictions based on the properties of the normal distribution, even when the underlying data does not follow a normal distribution.
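
The die-rolling case can be explored with a short simulation. This is a sketch under stated assumptions: a fair die, 30 rolls per average, 5,000 repetitions, and a fixed seed; all names and sizes are illustrative.

```python
import random
import statistics

def sample_means(n_rolls, n_trials, seed=0):
    """Average of n_rolls fair die rolls, repeated n_trials times."""
    rng = random.Random(seed)
    faces = [1, 2, 3, 4, 5, 6]
    return [statistics.fmean(rng.choices(faces, k=n_rolls)) for _ in range(n_trials)]

n_rolls = 30
means = sample_means(n_rolls, n_trials=5_000)

mu = 3.5                   # expected value of one fair die roll
sigma = (35 / 12) ** 0.5   # standard deviation of one fair die roll
predicted_sd = sigma / n_rolls ** 0.5

# For a normal distribution, roughly 68% of values lie within one
# standard deviation of the mean.
within_one_sd = sum(abs(m - mu) <= predicted_sd for m in means) / len(means)

print(statistics.fmean(means), statistics.stdev(means), predicted_sd, within_one_sd)
```

The averages should cluster around 3.5 with a spread close to the predicted standard deviation, and the share falling within one standard deviation should be near the normal benchmark, even though a single roll is uniformly distributed.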

10. The Law of Iterated Expectations

The law of iterated expectations, also known as the tower rule or the law of total expectation, is a fundamental concept in probability theory that relates to the expectation or average value of a random variable. This law states that the expectation of the conditional expectation of a random variable is equal to the expectation of the original random variable: E[E[X | Y]] = E[X].

For example, consider a random variable X representing the income of a randomly selected individual. The law of iterated expectations states that the expected value of the conditional expectation of X given some additional information Y is equal to the expected value of X itself.

The law of iterated expectations is a powerful tool for understanding the relationship between expectations and conditional expectations. It allows us to analyze the behavior of random variables under different conditions and make informed predictions based on conditional expectations.
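
The identity E[E[X | Y]] = E[X] can be checked exactly on a small case. The sketch below swaps the income example for a fair die, an assumption made so that everything can be enumerated: X is the roll and Y is its parity. The names are illustrative.

```python
from collections import defaultdict
from fractions import Fraction

omega = [1, 2, 3, 4, 5, 6]
p = Fraction(1, 6)  # fair die assumed

def parity(x):
    return "even" if x % 2 == 0 else "odd"

# Unconditional expectation E[X].
e_x = sum(x * p for x in omega)

# Accumulate P(Y = y) and the partial sums of x * P(x) within each group.
p_y = defaultdict(Fraction)
weighted_sum_by_y = defaultdict(Fraction)
for x in omega:
    p_y[parity(x)] += p
    weighted_sum_by_y[parity(x)] += x * p

# Conditional expectations E[X | Y = y].
e_x_given_y = {y: weighted_sum_by_y[y] / p_y[y] for y in p_y}

# Averaging the conditional expectations over Y recovers E[X].
e_of_conditional = sum(e_x_given_y[y] * p_y[y] for y in p_y)
assert e_of_conditional == e_x

print(e_x_given_y["odd"], e_x_given_y["even"], e_x)  # 3 4 7/2
```

The conditional averages are 3 for odd rolls and 4 for even rolls; weighting each by its probability of 1⁄2 gives 7⁄2, the overall average.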

Conclusion

Probability laws are the building blocks of probability theory, providing a robust framework for understanding and quantifying uncertainty. From the fundamental axioms of sample space and probability to the powerful concepts of conditional probability, Bayes’ theorem, and the central limit theorem, these laws empower us to make informed decisions and predictions in a wide range of domains.

By mastering these ten probability laws, we gain a deeper understanding of the mathematical foundations of uncertainty and the tools to navigate the complexities of real-world scenarios. Whether in finance, machine learning, or everyday decision-making, probability laws are indispensable for making sense of the world around us and shaping our understanding of likelihood and chance.

Probability laws are the cornerstone of probability theory, offering a structured approach to understanding uncertainty. By grasping these laws, we unlock the ability to analyze complex systems, make informed predictions, and navigate the intricacies of probability in diverse fields.

What is the primary purpose of probability laws?

Probability laws serve as the foundational principles of probability theory, providing a systematic framework for understanding and quantifying uncertainty. They enable us to make informed decisions, predictions, and analyses based on the likelihood of events, making them essential in fields ranging from science and engineering to finance and data science.

<div class="faq-item">

<div class="faq-question">

<h3>How do probability laws relate to real-world applications?</h3>

</div>

<div class="faq-answer">

<p>Probability laws are applied across diverse domains to model and analyze complex systems and phenomena. From predicting stock market trends and weather patterns to optimizing machine learning algorithms and designing reliable communication networks, probability laws provide the mathematical foundation for understanding and managing uncertainty in real-world scenarios.</p>

</div>

</div>

<div class="faq-item">

<div class="faq-question">

<h3>Are probability laws universally applicable across all domains?</h3>

</div>

<div class="faq-answer">

<p>While probability laws provide a general framework for understanding uncertainty, their specific applications may vary across different domains. For example, in physics, probability laws are used to model particle interactions, while in finance, they are employed to assess risk and make investment decisions. The context and specific problem at hand determine the most appropriate probability laws to apply.</p>

</div>

</div>

<div class="faq-item">

<div class="faq-question">

<h3>How can I learn more about probability laws and their applications?</h3>

</div>

<div class="faq-answer">

<p>To delve deeper into probability laws and their applications, consider exploring advanced textbooks on probability theory and statistics. Online resources, such as tutorial websites and MOOCs (Massive Open Online Courses), can also provide valuable insights and practical examples. Additionally, engaging with the work of renowned statisticians and probability theorists can offer a wealth of knowledge and inspiration.</p>

</div>

</div>

</div>