Mastering GPT-2: The Input Attention Mask

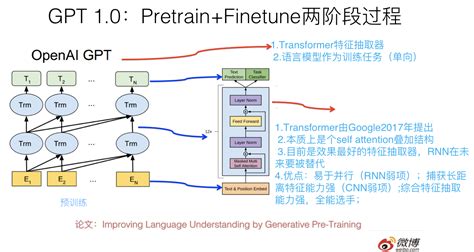

In natural language processing, GPT-2 has gained significant attention for its ability to generate human-like text. As researchers and developers work more closely with this language model, understanding the role of the input attention mask becomes important. The mask is often overlooked, yet it directly controls which tokens each position in GPT-2 is allowed to attend to, and handling it incorrectly (for example, when batching prompts of different lengths) can noticeably degrade the generated text. In this guide, we explore the mask's purpose, how it works, and where it matters in practice. By the end of this article, you will understand this component and its implications for text generation.

Unveiling the Input Attention Mask

The input attention mask is an input to the self-attention layers of the transformer architecture on which GPT-2 is built. It tells the attention mechanism which positions in the input sequence may be attended to: a value of 1 marks a real token, and a value of 0 marks a position (typically padding) that must be ignored. It works alongside a second, built-in mask, the causal mask, which prevents every position from attending to tokens that come after it, so that GPT-2 predicts each next token from earlier context only. In libraries such as Hugging Face Transformers, the padding mask is passed as the `attention_mask` argument next to `input_ids`.

A more accurate picture than a spotlight is a stencil: the mask does not decide which tokens are important (the learned attention weights do that); it only decides which tokens are visible at all. Without a padding mask, a batch of prompts padded to the same length would let the model attend to filler tokens as if they were real text, which can distort its predictions. The ability to relate distant tokens and keep text coherent comes from self-attention itself; the mask makes sure that ability draws only on legitimate context.
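In practice, the mask GPT-2 receives as input is mostly a padding mask. The sketch below shows, in plain Python, how one might be built for a batch; `pad_batch` is a hypothetical helper, and the token ids are illustrative rather than real GPT-2 encodings. In practice a tokenizer (for example the Hugging Face GPT-2 tokenizer with padding enabled) returns `input_ids` and `attention_mask` for you. GPT-2 has no dedicated padding token, so the sketch assumes the common convention of reusing id 50256 (`<|endoftext|>`). Left padding is the usual choice for generation, because it keeps the newest token at the end of every row.

```python
from typing import List, Tuple


def pad_batch(
    seqs: List[List[int]], pad_id: int, side: str = "left"
) -> Tuple[List[List[int]], List[List[int]]]:
    """Pad token-id sequences to a common length and build the matching mask.

    Real tokens get mask value 1; padding positions get 0.
    """
    if side not in ("left", "right"):
        raise ValueError("side must be 'left' or 'right'")
    max_len = max(len(s) for s in seqs)
    input_ids, attention_mask = [], []
    for s in seqs:
        n_pad = max_len - len(s)
        pads, ones, zeros = [pad_id] * n_pad, [1] * len(s), [0] * n_pad
        if side == "left":
            input_ids.append(pads + s)
            attention_mask.append(zeros + ones)
        else:
            input_ids.append(s + pads)
            attention_mask.append(ones + zeros)
    return input_ids, attention_mask


# Illustrative ids only (assumption: not a real tokenization).
ids, mask = pad_batch([[464, 2068, 7586], [464, 7586]], pad_id=50256)
print(ids)   # [[464, 2068, 7586], [50256, 464, 7586]]
print(mask)  # [[1, 1, 1], [0, 1, 1]]
```

The mask is built from sequence lengths, not from token values, so a genuine `<|endoftext|>` inside a sequence is never mistaken for padding.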

The Mechanics of Attention

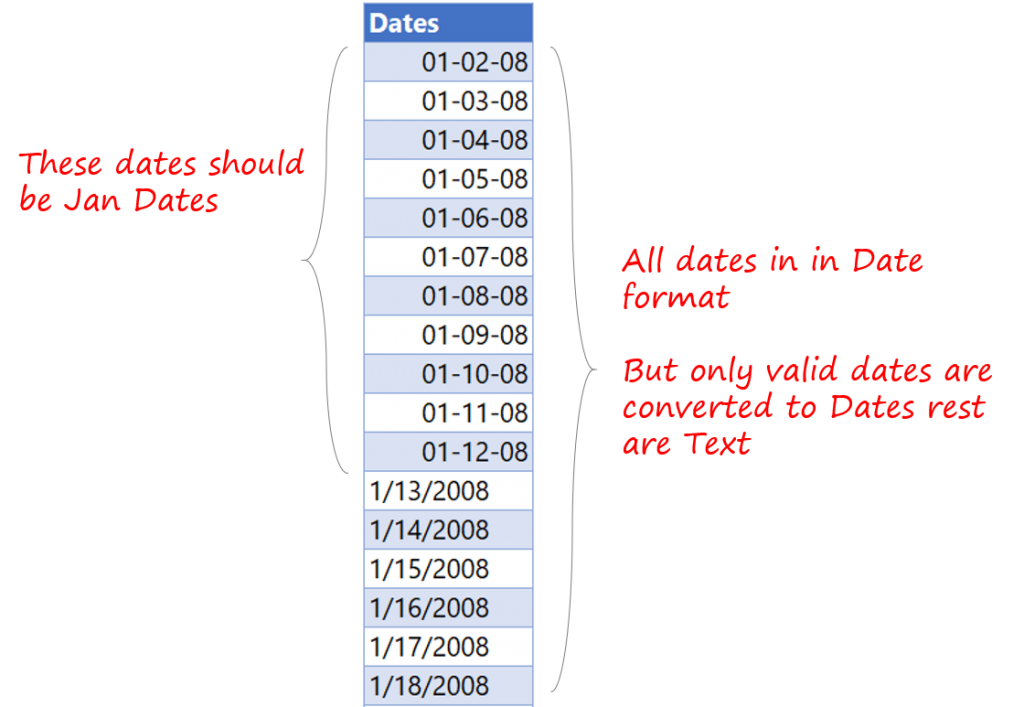

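At its core, the attention mechanism within GPT-2 assigns a weight to each visible token in the sequence: for each position, it compares that position's query vector with the key vectors of the tokens it may see, scales the resulting scores, and turns them into weights with a softmax. These weights determine how much each earlier token contributes when predicting the next one. The mask acts before the softmax: positions it hides receive a very large negative score, so after the softmax their weights are effectively zero and the remaining weights are renormalized to sum to 1.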
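Written out in the standard scaled dot-product form used by each attention head, with the mask entering as an additive matrix $M$ (a sketch of the usual formulation; implementations differ in details such as the exact negative constant):

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^\top}{\sqrt{d_k}} + M\right) V,
\qquad
M_{ij} =
\begin{cases}
0 & \text{if query } i \text{ may attend to key } j,\\
-\infty & \text{otherwise.}
\end{cases}
$$

Because $e^{-\infty} = 0$, hidden keys receive exactly zero weight, and the softmax renormalizes the remaining weights. In GPT-2, query $i$ may attend to key $j$ only if $j \le i$ (the causal mask) and token $j$ has an attention-mask value of 1 (the padding mask). Implementations typically use a large finite negative number instead of $-\infty$ to avoid numerical problems.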

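Let's consider an example. Suppose the input sentence is "The quick brown fox jumps over the lazy dog." The attention mask is not a rewritten copy of the sentence, and GPT-2 has no [MASK] token (that belongs to masked language models such as BERT). The mask is a sequence of 1s and 0s with one value per token, where 1 means "visible" and 0 means "hidden". The table shows one value per word for readability; real GPT-2 inputs are split into byte-pair-encoded tokens.

| Input sentence | Input attention mask (one value per word) |
|---|---|
| "The quick brown fox jumps over the lazy dog." | `[1, 1, 1, 1, 1, 1, 1, 1, 1]` (every word visible) |
| "The quick brown fox jumps over the lazy dog." | `[0, 0, 1, 1, 1, 0, 1, 1, 1]` ("The", "quick", and "over" hidden) |

With the second mask, the attention weights for "The", "quick", and "over" are forced to zero, and their share is redistributed among the remaining words, including "fox". Note what the mask cannot do: it can remove tokens, but it cannot raise the weight of a particular word, so it is not a way to tell the model that "fox" is the key entity. Hiding real words from a pretrained GPT-2 is also likely to degrade its output rather than sharpen it, since the model was not trained with such gaps; in practice, the mask is used mainly to hide padding.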
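A minimal, stdlib-only sketch of what a mask like the second row does to one row of attention weights, hiding "The", "quick", and "over". It assumes that all raw scores are equal (real GPT-2 scores come from learned query and key projections), which makes the effect easy to see. `masked_softmax` is a hypothetical helper written for this illustration.

```python
import math
from typing import List


def masked_softmax(scores: List[float], mask: List[int]) -> List[float]:
    """Softmax over scores, giving zero weight wherever mask == 0.

    Skipping masked positions before exponentiation is equivalent to
    adding -inf to their scores.
    """
    kept = [s for s, m in zip(scores, mask) if m == 1]
    if not kept:
        raise ValueError("at least one position must be unmasked")
    top = max(kept)  # subtract the max for numerical stability
    exps = [math.exp(s - top) if m == 1 else 0.0 for s, m in zip(scores, mask)]
    total = sum(exps)
    return [e / total for e in exps]


words = "The quick brown fox jumps over the lazy dog.".split()
scores = [0.0] * len(words)              # assumption: equal raw scores
full_mask = [1] * len(words)
hide_mask = [0, 0, 1, 1, 1, 0, 1, 1, 1]  # hide "The", "quick", "over"

for name, mask in [("full", full_mask), ("hidden", hide_mask)]:
    weights = masked_softmax(scores, mask)
    print(name, [round(w, 3) for w in weights])
# full   -> every word gets 1/9 ≈ 0.111
# hidden -> the three hidden words get 0.0, the other six get 1/6 ≈ 0.167
```

"fox" gains weight only because three competitors were removed; every other visible word gains exactly as much.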

Enhancing Contextual Awareness

Contextual awareness in GPT-2 comes from self-attention: every token can draw on every earlier token, weighted by learned relevance. The input attention mask supports this by ensuring that the context each token draws on consists only of real, earlier tokens, so the relationships the model captures reflect the actual text.

Capturing Long-Range Dependencies

One strength of the transformer architecture is its ability to capture long-range dependencies within the input sequence. In natural language, the meaning of a sentence often depends on words that are distant from each other, and self-attention connects any two positions directly, regardless of how far apart they are. GPT-2's causal mask shapes these connections: a token can attend to any earlier token in its context window (up to 1,024 tokens), but never to a later one.

For instance, consider the sentence: "John, who lives in Seattle, is a fan of the Seahawks." When processing "Seahawks", the attention mechanism can attend directly to "John" even though several words separate them; the causal mask permits this link because "John" comes earlier. Context of this kind helps GPT-2 produce relevant continuations, such as "John, a passionate Seattle resident, supports the local football team."
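A small sketch of the causal mask for this sentence, using word-level positions for readability (an assumption; real inputs are BPE tokens). It shows that the dependency runs in one direction: "Seahawks" can attend back to "John", twelve positions earlier, but "John" can never attend forward. The mask only permits the connection; learning to use it is the job of the attention weights. `causal_mask` is a hypothetical helper.

```python
from typing import List


def causal_mask(n: int) -> List[List[int]]:
    """mask[i][j] == 1 if position i may attend to position j, i.e. j <= i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


words = "John , who lives in Seattle , is a fan of the Seahawks .".split()
mask = causal_mask(len(words))
john, seahawks = words.index("John"), words.index("Seahawks")

print(mask[seahawks][john])  # 1: "Seahawks" can look back at "John"
print(mask[john][seahawks])  # 0: "John" cannot look ahead to "Seahawks"
```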

Maintaining Coherence

Coherence is a critical aspect of natural language generation. During generation, the masks ensure that each new token is predicted only from the prompt and the tokens generated so far, and that padding added for batching is never mistaken for context. This is especially important when generating longer sequences or processing several prompts at once.

Imagine a story generation task where the model is prompted with the sentence "The brave knight ventured into the dark forest." and this prompt is batched with a longer one, so it must be padded to the same length. If the padding positions are not masked out, the model treats the filler tokens as part of the text, and the continuation can drift away from the prompt or degrade into unrelated output.

With the correct attention mask, the padding is invisible and the model conditions only on the actual prompt. It can then produce a continuation that stays aligned with the initial context, such as: "The knight, undeterred by the eerie silence, pressed forward, his sword drawn, ready to face the unknown dangers lurking within."
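To make the interaction of the two masks concrete, here is a minimal sketch for a single left-padded prompt: query `i` may attend to key `j` only if `j` is not later than `i` (causal) and `j` is a real token (padding mask). `combined_mask` is a hypothetical helper written for illustration.

```python
from typing import List


def combined_mask(attention_mask: List[int]) -> List[List[int]]:
    """allowed[i][j] == 1 if query i may attend to key j.

    Key j must be at or before i (causal) and must be a real token
    (attention_mask[j] == 1).
    """
    n = len(attention_mask)
    return [
        [1 if j <= i and attention_mask[j] == 1 else 0 for j in range(n)]
        for i in range(n)
    ]


# A left-padded prompt: two padding positions, then four real tokens.
attention_mask = [0, 0, 1, 1, 1, 1]
for row in combined_mask(attention_mask):
    print(row)
```

The rows for the two padding positions come out all zeros. Real implementations typically guard against this (for example, by using a finite negative constant so the softmax stays defined), and the outputs at padding positions are discarded anyway; every real token sees only real tokens at or before its own position.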

Fine-Tuning GPT-2 with Input Attention Masks

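The input attention mask also matters when fine-tuning GPT-2 to adapt it to specific tasks and domains. Training batches usually contain sequences of different lengths, so they are padded, and the mask keeps that padding out of the attention computation. The padded positions are typically also excluded from the training loss, so the model is not trained to predict filler tokens.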
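A minimal sketch of excluding padding from the loss, following a common convention: labels at padded positions are set to -100, the default `ignore_index` of PyTorch's `CrossEntropyLoss`. It assumes the Hugging Face GPT-2 language-modeling head, which shifts labels internally, so labels can otherwise be a copy of `input_ids`. `make_labels` is a hypothetical helper and the ids are illustrative.

```python
from typing import List

IGNORE_INDEX = -100  # default ignore_index of PyTorch's CrossEntropyLoss


def make_labels(
    input_ids: List[List[int]], attention_mask: List[List[int]]
) -> List[List[int]]:
    """Copy input_ids into labels, replacing padding positions with IGNORE_INDEX."""
    return [
        [tok if m == 1 else IGNORE_INDEX for tok, m in zip(ids, mask)]
        for ids, mask in zip(input_ids, attention_mask)
    ]


# Right-padded training batch (illustrative ids; 50256 used as padding).
input_ids = [[464, 2068, 7586, 50256], [464, 7586, 50256, 50256]]
attention_mask = [[1, 1, 1, 0], [1, 1, 0, 0]]
print(make_labels(input_ids, attention_mask))
# [[464, 2068, 7586, -100], [464, 7586, -100, -100]]
```

Deriving the labels from `attention_mask` rather than from the padding id keeps a genuine `<|endoftext|>` token in the loss when it is part of the real text.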

Task-Specific Attention Masks

Different natural language processing tasks require the model to focus on different aspects of the input. For instance, a sentiment analysis task depends on words expressing emotion, while a named entity recognition task depends on proper nouns. In GPT-2, this kind of focus is learned: fine-tuning adjusts the model's weights so that its attention concentrates on the tokens that matter for the task.

A custom attention mask can additionally restrict which tokens are visible, for example to hide parts of the input that should not influence a prediction. Because the mask is binary, however, it can only include or exclude tokens; it cannot tell the model that emotion words or proper nouns are more important. Fine-tuning, with the mask handling padding, is what adapts GPT-2 to applications ranging from question answering to text summarization.

Domain Adaptation

Domain adaptation follows the same pattern. Each domain has its own language and terminology, and GPT-2 is adapted to it mainly by fine-tuning on in-domain text, during which the attention weights learn to prioritize domain-specific tokens.

For example, fine-tuning on medical text helps the model handle medical terms and concepts, and fine-tuning on legal documents helps it with legal terminology and phrasing. In both cases, the attention mask plays the same supporting role as in any fine-tuning run: keeping padding out of attention so that the model learns only from the real text.

Real-World Applications

The impact of the input attention mask extends beyond the research lab, finding practical applications in various industries.

Content Generation

One of the most prominent applications of GPT-2 is content generation, from creative writing to personalized product descriptions. When such content is generated for many prompts in one batch, the attention mask ensures that each output depends only on its own prompt and not on the padding around it. This makes batched generation reliable, which matters for businesses and writers producing text at scale.

Conversational AI

In conversational AI, chatbots and virtual assistants built on GPT-2 receive user messages of varying length. The attention mask lets such systems pad and batch these inputs while keeping the model's responses conditioned only on the actual conversation, which helps keep the responses coherent and contextually relevant.

Language Translation

GPT-2 can also attempt translation when prompted with a source sentence, although it is a general language model rather than a dedicated translation system, and its translation quality is limited. As in the other applications, the attention mask's role is to keep the model attending only to the real tokens of the prompt, not to padding.

Challenges and Future Directions

While the input attention mask is a powerful tool, it involves a trade-off between focus and context. A mask that hides more of the input, for example by letting each token see only a fixed window of recent tokens, narrows what the model can focus on but also removes context it might need.
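One concrete form of this trade-off is a local (sliding-window) causal mask, in which each position sees only the most recent `window` positions, including itself. This is not what GPT-2 uses (its causal mask lets every token see the entire preceding context); the sketch, built around a hypothetical `sliding_window_causal_mask` helper, only makes the trade-off visible: a smaller window means less context per token.

```python
from typing import List


def sliding_window_causal_mask(n: int, window: int) -> List[List[int]]:
    """mask[i][j] == 1 if j is one of the `window` most recent positions up to i."""
    if window < 1:
        raise ValueError("window must be at least 1")
    return [[1 if i - window < j <= i else 0 for j in range(n)] for i in range(n)]


for row in sliding_window_causal_mask(5, window=2):
    print(row)
# [1, 0, 0, 0, 0]
# [1, 1, 0, 0, 0]
# [0, 1, 1, 0, 0]
# [0, 0, 1, 1, 0]
# [0, 0, 0, 1, 1]
```

With `window` at least as large as the sequence length, the result is identical to the full causal mask.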

Researchers have explored ways to balance focus and context, such as dynamic or sparse attention patterns that adapt to the input or reduce the cost of attending over long sequences. Multi-head attention, which GPT-2 already uses, addresses part of the problem from another direction: different heads can attend to different aspects of the same visible context simultaneously. These directions aim to further enhance the capabilities of GPT-2 and other transformer-based models.

Additionally, as GPT-2 and similar models continue to evolve, ethical considerations become increasingly important. The responsible use of these powerful language models, especially in sensitive domains like healthcare or finance, requires careful attention to potential biases and ethical implications. Researchers and developers must work together to ensure that the benefits of these technologies are harnessed responsibly.

Conclusion

The input attention mask, a seemingly simple component, plays an essential role in GPT-2 and other transformer-based models. Together with the causal mask, it defines which tokens each position may attend to, ensuring that the contextual awareness, long-range connections, and coherence provided by self-attention are built only on real, earlier tokens. Its practical importance shows up in content generation, conversational AI, and any other setting where inputs are padded, batched, or used for fine-tuning.

As we continue to explore the capabilities of GPT-2 and its variants, the input attention mask will remain a crucial element, driving advancements and improvements in natural language processing. By understanding its role and implications, researchers and developers can harness its potential to create even more powerful and versatile language models.

How does the input attention mask differ from other attention mechanisms in GPT-2?

GPT-2 has one kind of attention: masked multi-head self-attention. Unlike encoder-decoder models, it has no cross-attention. The input attention mask is not a separate mechanism but an input to that self-attention: it tells each layer which positions (typically padding) to ignore, on top of the built-in causal mask that hides future tokens. How much each visible token matters is decided by the attention weights the model computes, not by the mask.

Can the input attention mask be dynamically adjusted during generation?

Yes, in the sense that the mask grows during generation: each newly generated token is appended with a mask value of 1, so later steps can attend to it, while padding positions from the prompt stay hidden. Custom schemes that change which earlier tokens are visible at each step are possible in principle, but standard GPT-2 generation does not use them.
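A minimal sketch of that bookkeeping. Hugging Face's `generate` does the equivalent internally for decoder-only models; `extend_mask` here is a hypothetical helper, and the loop stands in for real decoding steps.

```python
from typing import List


def extend_mask(attention_mask: List[List[int]], new_tokens: int = 1) -> List[List[int]]:
    """Append one 1 per newly generated token to every row of the mask."""
    return [row + [1] * new_tokens for row in attention_mask]


# Left-padded batch of two prompts (1 = real token, 0 = padding).
mask = [[1, 1, 1], [0, 1, 1]]
for _ in range(2):            # two decoding steps
    mask = extend_mask(mask)  # each generated token is real, so it is visible
print(mask)  # [[1, 1, 1, 1, 1], [0, 1, 1, 1, 1]]
```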

Are there any limitations to the use of input attention masks?

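Yes. The mask is binary: it can hide a token completely but cannot give it more or less weight, so it is a blunt tool for steering what the model focuses on. Hiding real tokens (rather than padding) from a pretrained GPT-2 also confronts it with a pattern it was not trained on, which is likely to degrade its output. Finally, a mask that does not match the padding in `input_ids`, for example one that is omitted for a left-padded batch, can silently corrupt results.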