Hypothesis Testing: 3 Crucial Tips

Hypothesis testing is a fundamental concept in statistics and data analysis, and it underlies many scientific discoveries and data-driven decisions. It gives researchers and analysts a methodical way to draw conclusions from data by deciding whether the evidence is strong enough to reject an initial assumption. The principles may seem straightforward, but applying them correctly is crucial for interpreting data and its implications accurately. This article covers three tips for understanding and applying hypothesis testing: choosing the right test, understanding Type I and Type II errors, and interpreting p-values correctly.

Understanding the Hypothesis Testing Process

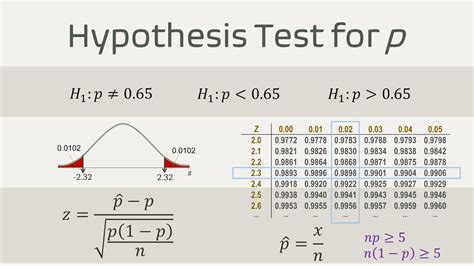

At its core, hypothesis testing is a systematic way of evaluating two competing statements about a population: the null hypothesis (H0) and the alternative hypothesis (Ha or H1). The null hypothesis represents the status quo or default assumption, usually that there is no effect or no relationship between variables. The alternative hypothesis states a deviation from the null, such as a nonzero effect or relationship. The objective is to determine whether the data provide sufficient evidence to reject the null hypothesis in favor of the alternative.

This process is underpinned by statistical inference, which lets researchers draw conclusions about a larger population from a sample. By collecting data from a subset of the population and analyzing it with statistical techniques, researchers can make inferences about the entire population with a stated level of uncertainty. In hypothesis testing, that uncertainty is expressed through statistical significance: a result is called statistically significant when an effect at least as large as the one observed would be unlikely to arise by chance alone if the null hypothesis were true.

The hypothesis testing process typically involves the following steps:

- Formulating the Hypotheses: Clearly state the null and alternative hypotheses based on the research question or objective.

- Collecting Data: Gather relevant data through surveys, experiments, or observations, ensuring the data is representative of the population.

- Choosing an Appropriate Test: Select a statistical test that aligns with the nature of the data and the research question. Common tests include t-tests, chi-square tests, and analysis of variance (ANOVA) tests.

- Setting the Significance Level: Determine the significance level (often denoted as α), which is the maximum acceptable probability of rejecting the null hypothesis when it is actually true (a Type I error). A common choice is α = 0.05, which accepts a 5% chance of a Type I error when the null hypothesis is true.

- Calculating the Test Statistic: Compute a value that summarizes how far the sample departs from what the null hypothesis predicts, such as a t-statistic for a mean difference or a chi-square statistic for a contingency table.

- Determining the Critical Region: Identify the critical values or regions of the test statistic that would lead to rejection of the null hypothesis. These values are determined based on the chosen significance level and the properties of the test statistic.

- Interpreting the Results: Compare the calculated test statistic to the critical values. If the test statistic falls within the critical region, the null hypothesis is rejected in favor of the alternative. If not, we fail to reject the null hypothesis, which is not the same as showing that it is true.
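The steps above can be sketched with a one-sample z-test. This is a minimal illustration rather than a recommended default: the scores are made up, and it assumes the population standard deviation (`sigma`) is known, which is rarely true in practice (a t-test is the usual choice otherwise). It uses only Python's standard library.

```python
from math import sqrt
from statistics import NormalDist, mean

# Collecting data: a hypothetical sample of 16 test scores (made up).
sample = [108, 112, 95, 120, 101, 117, 99, 110,
          125, 104, 93, 118, 109, 115, 98, 122]

# Formulating the hypotheses: H0: mu = 100, Ha: mu != 100 (two-sided).
mu_0 = 100

# Choosing a test: a z-test, under the ASSUMPTION that sigma is known.
sigma = 15

# Setting the significance level.
alpha = 0.05

# Calculating the test statistic.
n = len(sample)
z_stat = (mean(sample) - mu_0) / (sigma / sqrt(n))

# Determining the critical region: reject when |z| exceeds z_crit.
z_crit = NormalDist().inv_cdf(1 - alpha / 2)

# Interpreting the results.
reject_null = abs(z_stat) > z_crit
print(f"z = {z_stat:.3f}, critical value = {z_crit:.3f}, reject H0: {reject_null}")
```

Here the sample mean is 109.125, so z is about 2.43, which lies beyond the critical value of about 1.96, and the null hypothesis is rejected at the 5% level.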

It is important to note that hypothesis testing is a probabilistic approach: it provides evidence but not absolute certainty. The results are interpreted in the context of the chosen significance level, and the conclusions extend only to the population that the sample represents.

Tip 1: Choosing the Right Test for Your Data

One of the most critical aspects of hypothesis testing is selecting the appropriate statistical test. The choice of test depends on various factors, including the nature of the data (categorical or continuous), the research question, and the specific relationships being investigated. Using the wrong test can lead to inaccurate conclusions and misinterpretations of the data.

Here are some common tests and their typical applications:

| Test | Description | Common Use Cases |
|---|---|---|
| Independent Samples t-test | Compares the means of two independent groups. | Testing whether the average height differs between males and females. |
| Paired Samples t-test | Compares the means of two related or dependent groups. | Evaluating the difference in test scores before and after a training program. |
| One-Way ANOVA | Analyzes the difference in means between three or more independent groups. | Determining if the average income varies across different educational levels. |
| Chi-Square Test of Independence | Assesses the relationship between two categorical variables. | Investigating whether there is an association between smoking status and lung cancer. |
| Pearson's Correlation Coefficient | Measures the linear relationship between two continuous variables. | Examining the correlation between study hours and exam scores. |
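As one concrete instance from the table, the chi-square test of independence can be sketched by hand for a 2x2 table. The counts below are invented for illustration, and the p-value shortcut works only for one degree of freedom (a 2x2 table), where a chi-square variable is the square of a standard normal. No continuity correction is applied.

```python
from math import sqrt
from statistics import NormalDist

# Hypothetical 2x2 contingency table (counts are made up):
#            cancer  no cancer
observed = [[30, 70],   # smokers
            [10, 90]]   # non-smokers

row_totals = [sum(row) for row in observed]
col_totals = [sum(col) for col in zip(*observed)]
total = sum(row_totals)

# Expected counts if smoking status and cancer were independent.
expected = [[r * c / total for c in col_totals] for r in row_totals]

# Pearson chi-square statistic: sum of (observed - expected)^2 / expected.
chi2 = sum((o - e) ** 2 / e
           for obs_row, exp_row in zip(observed, expected)
           for o, e in zip(obs_row, exp_row))

# With df = 1, P(X >= chi2) = 2 * (1 - Phi(sqrt(chi2))).
p_value = 2 * (1 - NormalDist().cdf(sqrt(chi2)))
print(f"chi-square = {chi2:.2f}, p = {p_value:.5f}")
```

For these counts every expected cell is 20 or 80, the statistic is 12.5, and the p-value falls well below 0.05, so independence would be rejected. Larger tables need the general chi-square distribution, which a statistics library provides.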

When selecting a test, consider the following:

- Data Type: Ensure the test aligns with the nature of your data. For example, t-tests are appropriate for continuous data, while chi-square tests are suitable for categorical data.

- Research Question: Understand the specific research question or hypothesis you want to test. Different tests are designed to address different types of questions.

- Sample Size: Some tests, like t-tests, may have assumptions about the distribution of the data or require a minimum sample size. Ensure your sample size is adequate for the chosen test.

- Statistical Assumptions: Each test has specific assumptions about the data, such as normality, independence, or equal variance. Verify that your data meets these assumptions.
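The first two considerations can be encoded as a rough lookup over the tests in the table. The function `suggest_test` and its parameters are hypothetical names introduced here for illustration. It deliberately ignores sample size and assumption checks, so treat its answer as a starting point rather than a verdict.

```python
def suggest_test(outcome: str, groups: int = 2, paired: bool = False) -> str:
    """Hypothetical helper: map a simple study design to a test from the table.

    outcome: "continuous" or "categorical" (type of the measured variable).
    groups:  number of groups being compared.
    paired:  True if the same subjects are measured in each condition.
    """
    if outcome == "categorical":
        return "Chi-Square Test of Independence"
    if outcome != "continuous":
        raise ValueError(f"unknown outcome type: {outcome!r}")
    if groups >= 3:
        if paired:
            # Repeated measures over 3+ conditions is not covered by the table.
            raise ValueError("design not covered by the table")
        return "One-Way ANOVA"
    if groups == 2:
        return "Paired Samples t-test" if paired else "Independent Samples t-test"
    raise ValueError("need at least two groups to compare")


# Example: test scores before and after a training program.
print(suggest_test("continuous", groups=2, paired=True))
```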

Tip 2: Understanding Type I and Type II Errors

In hypothesis testing, errors can occur when making decisions based on the test results. These errors are classified into two types: Type I errors and Type II errors.

A Type I error occurs when the null hypothesis is rejected even though it is actually true. In other words, it is the error of concluding that an effect or relationship exists when there is none. This type of error is a false positive, and its probability is capped by the chosen significance level (α). With α = 0.05, a true null hypothesis is rejected in at most 5% of tests; choosing a smaller α lowers that rate further.

On the other hand, a Type II error occurs when the null hypothesis is not rejected even though it is actually false. This error is a false negative: a failure to detect a genuine effect or relationship. The probability of a Type II error is denoted as β, and it depends on the sample size, the effect size, the variability of the data, and the chosen significance level. Reducing β typically requires a larger sample size or a more powerful statistical test.

It is important to strike a balance between controlling Type I and Type II errors. While reducing Type I errors is often prioritized by setting a low significance level, it is also crucial to consider the practical implications of Type II errors. In some cases, failing to detect a real effect (Type II error) may have more severe consequences than a false positive (Type I error). Therefore, researchers must carefully consider the potential costs and benefits of each type of error in their specific context.
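The trade-off between the two error types can be seen in a small simulation. This sketch uses a two-sided z-test of H0: mu = 0 with a known standard deviation; the true mean of 0.5, the sample size of 25, and the number of trials are arbitrary choices for illustration.

```python
import random
from math import sqrt
from statistics import NormalDist


def rejection_rate(true_mean, n=25, sigma=1.0, alpha=0.05, trials=5000, seed=0):
    """Fraction of simulated samples in which a two-sided z-test rejects H0: mu = 0."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, sigma) for _ in range(n)]
        z = (sum(sample) / n) / (sigma / sqrt(n))
        if abs(z) > z_crit:
            rejections += 1
    return rejections / trials


# H0 is true (mu = 0): every rejection is a Type I error.
type_1_rate = rejection_rate(true_mean=0.0)

# H0 is false (mu = 0.5): every non-rejection is a Type II error.
type_2_rate = 1 - rejection_rate(true_mean=0.5)

# A stricter alpha lowers the Type I rate but raises the Type II rate.
type_2_strict = 1 - rejection_rate(true_mean=0.5, alpha=0.01)

print(f"Type I rate  (alpha=0.05): {type_1_rate:.3f}")
print(f"Type II rate (alpha=0.05): {type_2_rate:.3f}")
print(f"Type II rate (alpha=0.01): {type_2_strict:.3f}")
```

The simulated Type I rate should land near the chosen α, while the Type II rate grows when α is tightened and the sample size is held fixed.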

Tip 3: Interpreting P-values Correctly

The p-value, short for “probability value,” is a fundamental concept in hypothesis testing. It is the probability of obtaining a test statistic at least as extreme as the one actually observed, assuming the null hypothesis is true. In other words, the p-value measures how surprising the data would be if the null hypothesis held: the smaller the p-value, the stronger the evidence against the null hypothesis.

When interpreting p-values, it is crucial to understand that they do not directly indicate the probability of the null hypothesis being true or false. Instead, they provide information about the strength of the evidence against the null hypothesis. A low p-value (typically less than 0.05) suggests that the data is unlikely to have occurred under the null hypothesis, indicating stronger evidence against it.

However, it is essential to avoid the common misinterpretation of p-values as absolute measures of certainty. A p-value of 0.04 does not mean there is a 4% chance that the null hypothesis is true; rather, it means that if the null hypothesis were true, a result at least as extreme as the one observed would occur about 4% of the time. This distinction is critical in maintaining a nuanced understanding of statistical evidence.
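A permutation test makes this definition concrete: it builds the "null hypothesis is true" world by shuffling group labels and counts how often the shuffled data look at least as extreme as the real data. The scores and the function name `permutation_p_value` are made up for this sketch, and the test statistic is assumed to be the absolute difference in group means.

```python
import random


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation p-value for a difference in means."""
    rng = random.Random(seed)
    n_a, n_b = len(group_a), len(group_b)
    observed = abs(sum(group_a) / n_a - sum(group_b) / n_b)
    pooled = list(group_a) + list(group_b)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # If H0 is true, group labels are arbitrary, so shuffle them.
        rng.shuffle(pooled)
        diff = abs(sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / n_b)
        if diff >= observed:
            at_least_as_extreme += 1
    # Count the observed labelling itself so the estimate is never exactly 0.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Hypothetical exam scores for two groups (made up).
control = [72, 75, 68, 80, 71, 77, 69, 74]
treated = [78, 82, 75, 85, 79, 88, 76, 81]

p_value = permutation_p_value(control, treated)
print(f"permutation p-value: {p_value:.4f}")
```

The result is the share of label shufflings that produce a gap at least as large as the observed one. It says nothing directly about the probability that the null hypothesis is true.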

Additionally, p-values should not be used in isolation to make decisions. They should be considered alongside other factors, such as effect size, practical significance, and the specific research context. A small p-value may indicate statistical significance, but it may not always translate to practical or meaningful implications in the real world.
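The gap between statistical and practical significance shows up clearly with very large samples. The summary statistics below are invented: two groups of 100,000 whose means differ by half a point on a scale with a standard deviation of 15. The sketch assumes a common standard deviation and uses a two-sample z-test, which is reasonable at this sample size.

```python
from math import sqrt
from statistics import NormalDist

# Hypothetical summary statistics (made up for illustration).
n_per_group = 100_000
mean_a, mean_b = 100.0, 100.5
sd = 15.0  # ASSUMED common standard deviation of both groups

# Two-sample z-test on the difference in means.
standard_error = sd * sqrt(2 / n_per_group)
z_stat = (mean_b - mean_a) / standard_error
p_value = 2 * (1 - NormalDist().cdf(abs(z_stat)))

# Cohen's d: the mean difference expressed in standard-deviation units.
cohens_d = (mean_b - mean_a) / sd

print(f"z = {z_stat:.2f}, p = {p_value:.2e}, Cohen's d = {cohens_d:.3f}")
```

The p-value is tiny, yet Cohen's d is about 0.03, far below the 0.2 that is conventionally labelled a small effect. Whether such a difference matters is a question for the research context, not for the p-value.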

Conclusion

Hypothesis testing is a powerful tool for drawing meaningful conclusions from data, but it requires a deep understanding of the process and its nuances. By choosing the appropriate statistical test, considering the implications of Type I and Type II errors, and interpreting p-values correctly, researchers can make more informed decisions and enhance the validity of their findings. As with any statistical technique, continuous learning and a critical mindset are essential for mastering hypothesis testing and contributing to the advancement of knowledge in various fields.

What is the significance level (α) in hypothesis testing, and why is it important to choose it carefully?

The significance level, denoted as α, represents the maximum probability of rejecting the null hypothesis when it is actually true (Type I error). Choosing α carefully is crucial because it determines the balance between Type I and Type II errors. A lower α reduces the chance of Type I errors, but it may increase the risk of Type II errors. Researchers must consider the specific research context and the potential consequences of each type of error to set an appropriate significance level.

How can I determine the power of a statistical test, and why is it important to consider power in hypothesis testing?

The power of a statistical test is the probability that it detects a true effect or relationship when one exists, which equals 1 − β. It depends on the sample size, the effect size, and the chosen significance level, and it can be estimated before a study with a power analysis that takes these quantities as inputs. Higher power means a lower likelihood of committing a Type II error. Researchers should aim for sufficient power in their studies so that genuine effects are not overlooked because of inadequate sample sizes or test sensitivity.
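As a rough sketch of such a calculation, power for a two-sided comparison of two group means can be approximated with the normal distribution. The function names are hypothetical, `d` is the standardized effect size (Cohen's d), and equal group sizes are assumed. Exact t-test power uses the noncentral t distribution and gives slightly different numbers.

```python
from math import ceil, sqrt
from statistics import NormalDist

_norm = NormalDist()


def power_two_sample(d, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample test (normal approximation)."""
    z_crit = _norm.inv_cdf(1 - alpha / 2)
    shift = d * sqrt(n_per_group / 2)  # expected z-statistic under the alternative
    return _norm.cdf(shift - z_crit) + _norm.cdf(-shift - z_crit)


def n_per_group_for_power(d, power=0.80, alpha=0.05):
    """Approximate per-group sample size needed to reach the target power."""
    z_alpha = _norm.inv_cdf(1 - alpha / 2)
    z_beta = _norm.inv_cdf(power)
    return ceil(2 * ((z_alpha + z_beta) / d) ** 2)


power = power_two_sample(d=0.5, n_per_group=64)
required_n = n_per_group_for_power(d=0.5)
print(f"power with 64 per group: {power:.3f}")
print(f"per-group n for 80% power: {required_n}")
```

For a medium effect (d = 0.5) at α = 0.05, this approximation gives roughly 80% power with about 63 to 64 participants per group.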

Are there any alternative approaches to hypothesis testing, and when might they be preferable?

Hypothesis testing as described here is widely used, but alternatives and variants exist, such as Bayesian hypothesis testing and non-parametric tests. Bayesian testing combines prior knowledge with the observed data to assess how probable each hypothesis is, for example through a posterior probability for the null hypothesis. Non-parametric tests are still hypothesis tests, but they make weaker distributional assumptions and suit data that do not meet the assumptions of parametric tests such as the t-test. Researchers should consider these options when their data or research objectives fit them better.
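One of the simplest non-parametric tests is the sign test for paired data, which looks only at whether each difference is positive or negative and so needs no normality assumption. The sketch below computes its exact two-sided p-value from the binomial distribution; the scores and the function name are made up for illustration.

```python
from math import comb


def sign_test_p_value(before, after):
    """Exact two-sided sign test for paired data (ties are dropped)."""
    diffs = [a - b for b, a in zip(before, after) if a != b]
    n = len(diffs)
    positives = sum(1 for d in diffs if d > 0)
    k = min(positives, n - positives)
    # Under H0 each nonzero difference is positive with probability 0.5,
    # so the count of positives follows a Binomial(n, 0.5) distribution.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Hypothetical scores before and after a training program (made up).
before = [72, 75, 68, 80, 71, 77, 69, 74, 70, 73]
after = [78, 74, 75, 85, 79, 88, 76, 81, 72, 73]

p_value = sign_test_p_value(before, after)
print(f"sign test p-value: {p_value:.4f}")
```

Here one pair is tied and dropped, eight of the remaining nine differences are positive, and the p-value is 20/512, or about 0.039. The sign test ignores the size of each difference, so it usually has less power than a paired t-test when the t-test's assumptions do hold.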