5 Creative HashMap Strategies

Efficient data structures matter for performance and memory use, particularly in applications that handle large datasets. The HashMap is among the most versatile and widely used of them, offering average constant-time insertion and lookup. Getting that performance in practice depends on a handful of design choices. This guide covers five of them: dynamic resizing, collision resolution, caching, thread safety, and the choice of hash function, most illustrated with a short code example.

1. Dynamic Resizing: Adapting to Data Growth

One of the key challenges when working with HashMaps is managing their capacity as the amount of stored data increases. A fixed-size table wastes memory when it is oversized and slows down as it fills, because more keys end up sharing each bucket. Dynamic resizing addresses both problems.

With dynamic resizing, the HashMap adapts to the changing needs of the application. When the number of elements reaches a predefined fraction of the capacity, known as the load factor threshold (0.75 is the default in Java's `HashMap`), the data structure automatically increases its capacity. This process, often referred to as rehashing, allocates a larger bucket array and re-inserts every existing entry, because each entry's index depends on the capacity.

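```java
// Example code snippet in Java: an int-to-int map with chained buckets
public class HashMapExample {
    // Resize once size reaches 75% of capacity
    private static final double RESIZE_THRESHOLD = 0.75;

    private static final class Entry {
        final int key;
        int value;
        Entry next;
        Entry(int key, int value, Entry next) { this.key = key; this.value = value; this.next = next; }
    }

    private int size;
    private int capacity;
    private Entry[] data;

    public HashMapExample(int initialCapacity) {
        // Initialize HashMap with initial capacity (at least one bucket)
        capacity = Math.max(1, initialCapacity);
        size = 0;
        data = new Entry[capacity];
    }

    // ... [Other methods]

    public void put(int key, int value) {
        // Check if HashMap needs resizing
        if (size >= RESIZE_THRESHOLD * capacity) {
            resize();
        }
        // Insert or update key-value pair
        int index = Math.floorMod(key, capacity);
        for (Entry e = data[index]; e != null; e = e.next) {
            if (e.key == key) {
                e.value = value;
                return;
            }
        }
        data[index] = new Entry(key, value, data[index]);
        size++;
    }

    private void resize() {
        // Create a new bucket array with doubled capacity
        Entry[] newData = new Entry[2 * capacity];
        // Migrate existing entries; each index depends on the new capacity
        for (Entry head : data) {
            for (Entry e = head; e != null; ) {
                Entry next = e.next;
                int index = Math.floorMod(e.key, newData.length);
                e.next = newData[index];
                newData[index] = e;
                e = next;
            }
        }
        data = newData;
        capacity = newData.length;
    }
}
```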

Dynamic resizing keeps the load factor below the threshold, which limits collisions (it cannot prevent them entirely) and keeps lookups fast on average. Because the capacity doubles each time, the cost of rehashing is amortized over many insertions. This strategy is particularly beneficial for applications with dynamic and unpredictable data growth, such as online databases or user-generated content platforms.
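To make the threshold arithmetic concrete, the following Python sketch replays the same check as the Java `put` above (resize when `size >= threshold * capacity`, then double) and records when each resize fires. The function name `resize_points` and the default of 16 initial buckets are assumptions chosen for illustration, not part of any library.

```python
def resize_points(n_inserts, initial_capacity=16, load_factor=0.75):
    """Simulate n_inserts insertions of distinct keys.

    Returns a list of (size_at_resize, new_capacity) pairs, one per doubling.
    """
    capacity = initial_capacity
    size = 0
    events = []
    for _ in range(n_inserts):
        # Same check as the Java sketch: grow before inserting
        if size >= load_factor * capacity:
            capacity *= 2
            events.append((size, capacity))
        size += 1
    return events


events = resize_points(100)
# Entries migrated across all resizes (each resize moves every stored entry)
total_moved = sum(size for size, _ in events)
print(events, total_moved)
```

For 100 insertions starting from 16 buckets, resizes fire at sizes 12, 24, 48, and 96, so 180 entries are migrated in total. That is fewer than two moves per insertion, which is the sense in which doubling keeps the amortized cost of insertion constant.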

2. Collision Resolution Techniques: Minimizing Conflicts

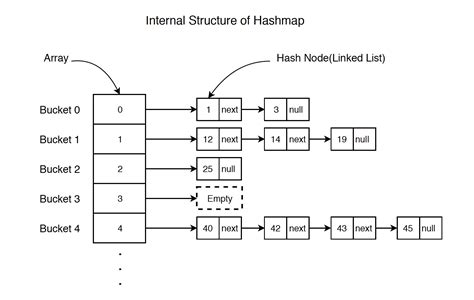

Hash collisions, where two different keys hash to the same index, are an inherent challenge in HashMaps. Efficient collision resolution techniques are essential to maintain the integrity and performance of the data structure.

One widely used approach is separate chaining, which maintains a linked list at each index of the HashMap. When a collision occurs, the new key-value pair is appended to the list at that index. Insertion, deletion, and retrieval stay efficient in the presence of collisions as long as the lists remain short.
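A minimal sketch of separate chaining in Python follows. It uses a Python list per bucket in place of a linked list, and the class name `ChainedMap` and its methods are hypothetical names chosen for this example.

```python
class ChainedMap:
    """Toy hash map using separate chaining: one list of (key, value) pairs per bucket."""

    def __init__(self, num_buckets=8):
        self.buckets = [[] for _ in range(num_buckets)]

    def _bucket(self, key):
        # Map the key's hash onto one of the buckets
        return self.buckets[hash(key) % len(self.buckets)]

    def put(self, key, value):
        bucket = self._bucket(key)
        for i, (k, _) in enumerate(bucket):
            if k == key:
                bucket[i] = (key, value)  # key already present: overwrite
                return
        bucket.append((key, value))  # new key, possibly colliding: append

    def get(self, key, default=None):
        for k, v in self._bucket(key):
            if k == key:
                return v
        return default

    def remove(self, key):
        bucket = self._bucket(key)
        for i, (k, _) in enumerate(bucket):
            if k == key:
                del bucket[i]
                return True
        return False


m = ChainedMap(num_buckets=4)
m.put(1, "a")
m.put(5, "b")  # with 4 buckets, keys 1 and 5 share bucket 1
print(m.get(1), m.get(5), len(m.buckets[1]))
```

Both keys remain retrievable even though they share a bucket; the cost of a lookup grows with the length of that bucket's list.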

| Collision Resolution Technique | Description |
|---|---|
| Separate Chaining | Each index of the HashMap contains a linked list to handle collisions. New elements are appended to the list. |
| Open Addressing with Linear Probing | When a collision occurs, the algorithm probes the next available index in a linear fashion until an empty slot is found. |
| Quadratic Probing | Similar to linear probing, but with a quadratic sequence of probes to reduce clustering and improve distribution. |

Choosing a technique that suits the workload limits the cost of collisions. Separate chaining tolerates high load factors, while open addressing keeps all entries in one contiguous array, which is cache-friendly but degrades quickly as the table fills.
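The open-addressing row of the table can be sketched as follows. This is an illustrative Python version of linear probing with a fixed capacity; the class name `LinearProbingMap` and the two sentinel objects are assumptions of this example. Deleted slots are marked with a tombstone so that later lookups keep probing past them.

```python
_EMPTY = object()    # slot has never held a key
_DELETED = object()  # tombstone: slot held a key that was removed


class LinearProbingMap:
    def __init__(self, capacity=8):
        self.keys = [_EMPTY] * capacity
        self.values = [None] * capacity
        self.size = 0

    def _probe(self, key):
        """Yield slot indices from the key's home slot onward, wrapping around."""
        n = len(self.keys)
        start = hash(key) % n
        for step in range(n):
            yield (start + step) % n

    def put(self, key, value):
        first_free = None
        for i in self._probe(key):
            k = self.keys[i]
            if k is _EMPTY:
                if first_free is None:
                    first_free = i
                break  # key cannot be stored beyond an empty slot
            if k is _DELETED:
                if first_free is None:
                    first_free = i  # reusable, but keep looking for the key
            elif k == key:
                self.values[i] = value  # existing key: overwrite
                return
        if first_free is None:
            raise RuntimeError("table full; a fuller implementation would resize")
        self.keys[first_free] = key
        self.values[first_free] = value
        self.size += 1

    def get(self, key, default=None):
        for i in self._probe(key):
            k = self.keys[i]
            if k is _EMPTY:
                return default
            if k is not _DELETED and k == key:
                return self.values[i]
        return default

    def remove(self, key):
        for i in self._probe(key):
            k = self.keys[i]
            if k is _EMPTY:
                return False
            if k is not _DELETED and k == key:
                self.keys[i] = _DELETED
                self.values[i] = None
                self.size -= 1
                return True
        return False


m = LinearProbingMap(capacity=8)
m.put(1, "a")
m.put(9, "b")   # home slot 1 is taken, so this lands in slot 2
m.put(17, "c")  # slots 1 and 2 are taken, so this lands in slot 3
```

Quadratic probing would differ only in the offset sequence produced by `_probe`; it is not shown here because a quadratic sequence needs extra care to guarantee that every slot can be reached.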

3. Caching Strategies for Improved Lookup Speed

In applications that perform frequent lookups, lookup speed becomes critical. Caching helps when the full lookup is expensive, for example when the map is backed by disk or a remote service, or when a miss triggers a costly computation. It does not reduce hashing as such, because a cache keyed by the same keys must still hash them.

One effective approach is to maintain a small, fast cache in front of the main HashMap. When a lookup is performed, the cache is checked first. If the key is found in the cache, the corresponding value is returned immediately and the slower lookup is skipped. On a miss, the value is fetched from the main store and copied into the cache for next time.

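```python
# Example code snippet in Python
class HashMapWithCache:
    def __init__(self):
        self.hashmap = {}  # stands in for the slower backing store
        self.cache = {}

    def put(self, key, value):
        # Write to the backing map and drop any stale cached copy
        self.hashmap[key] = value
        self.cache.pop(key, None)

    def get(self, key):
        # Check if key is in cache
        if key in self.cache:
            return self.cache[key]
        # Perform HashMap lookup
        if key in self.hashmap:
            # Store key-value pair in cache
            self.cache[key] = self.hashmap[key]
            return self.hashmap[key]
        return None
```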

A cache pays off most when a small set of keys accounts for most of the accesses and the underlying lookup is slow. For an ordinary in-memory HashMap, a second in-memory map in front of it adds work rather than saving it, so measure before adding one.
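In practice a cache also needs a size bound and a way to discard stale entries. The sketch below shows one common arrangement, a least-recently-used (LRU) cache built on `collections.OrderedDict`. The class name `LRUCachedStore` and the `backing_get` callable are hypothetical; `backing_get` stands in for whatever slow lookup the application has.

```python
from collections import OrderedDict


class LRUCachedStore:
    """Bounded least-recently-used cache in front of a slower lookup function."""

    def __init__(self, backing_get, max_entries=128):
        self._backing_get = backing_get  # assumed: key -> value, or None if absent
        self._cache = OrderedDict()
        self._max_entries = max_entries
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self._cache:
            self._cache.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._cache[key]
        self.misses += 1
        value = self._backing_get(key)
        if value is not None:
            self._cache[key] = value
            if len(self._cache) > self._max_entries:
                self._cache.popitem(last=False)  # evict the least recently used
        return value

    def invalidate(self, key):
        """Drop a cached entry, e.g. after the backing store has changed."""
        self._cache.pop(key, None)


backing = {"a": 1, "b": 2, "c": 3}
calls = []


def slow_get(key):
    calls.append(key)  # record every trip to the backing store
    return backing.get(key)


store = LRUCachedStore(slow_get, max_entries=2)
results = [store.get(k) for k in ["a", "a", "b", "c", "a"]]
print(results, calls, store.hits, store.misses)
```

With room for only two entries, the second request for `"a"` is a hit, but `"a"` is evicted once `"b"` and `"c"` arrive, so the final request goes back to the backing store.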

4. Parallel Processing with Thread-Safe HashMaps

In multi-threaded or parallel processing environments, ensuring thread safety is crucial to prevent data corruption and maintain consistent behavior. Thread-safe HashMaps offer a solution to this challenge, allowing multiple threads to access and modify the HashMap concurrently without interfering with each other.

Thread-safe HashMaps employ synchronization mechanisms, such as locks or atomic operations, to ensure that critical sections of code are accessed exclusively by one thread at a time. This prevents issues like race conditions or inconsistent data modifications.

```java
// Example code snippet in Java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

public class ThreadSafeHashMap<K, V> {
    private final Map<K, V> hashMap;
    private final Lock lock = new ReentrantLock();

    public ThreadSafeHashMap() {
        hashMap = new HashMap<>();
    }

    public void put(K key, V value) {
        // Hold the lock for the whole update; release it even if put throws
        lock.lock();
        try {
            hashMap.put(key, value);
        } finally {
            lock.unlock();
        }
    }

    // ... [Other thread-safe methods]
}
```

Thread-safe HashMaps let concurrent code share state without corrupting it. A single lock, as in the example, serializes every operation; when many threads contend for the map, a structure with finer-grained synchronization, such as Java's `ConcurrentHashMap`, usually scales better.
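Locking individual `put` and `get` calls is not sufficient when an update depends on the current value, because another thread can change the entry between the read and the write. The Python sketch below keeps the read and the write under one lock. The class name `LockedCounterMap` and its `increment` method are hypothetical, introduced only for this example.

```python
import threading


class LockedCounterMap:
    """Dict guarded by a single lock so read-modify-write updates are atomic."""

    def __init__(self):
        self._data = {}
        self._lock = threading.Lock()

    def increment(self, key, amount=1):
        # Read and write happen under the same lock, so no update is lost
        with self._lock:
            self._data[key] = self._data.get(key, 0) + amount
            return self._data[key]

    def get(self, key, default=None):
        with self._lock:
            return self._data.get(key, default)


counts = LockedCounterMap()


def worker():
    for _ in range(10_000):
        counts.increment("hits")


threads = [threading.Thread(target=worker) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counts.get("hits"))
```

Four threads each perform 10,000 increments, and because every increment runs entirely inside the lock, the final count is the full 40,000.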

5. Advanced Hashing Algorithms for Enhanced Performance

The choice of hash function can have a significant impact on the performance of a HashMap. A function that spreads keys evenly across buckets keeps chains and probe sequences short; a poor one concentrates keys in a few buckets and pushes lookups toward linear time.

One notable example is MurmurHash, a fast non-cryptographic hash known for its good distribution properties and widely adopted in hash tables and similar structures. Because it is not cryptographic, it does not resist deliberately crafted collisions; where keys come from untrusted input, a keyed hash such as SipHash is the usual choice.

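```go
// Example code snippet in Go
package hashmap

import "github.com/spaolacci/murmur3"

type entry struct {
	key   string
	value interface{}
}

// HashMap picks a bucket with MurmurHash3 and stores full keys in the bucket,
// so two keys with the same hash do not overwrite each other.
type HashMap struct {
	buckets [][]entry
}

// New creates a HashMap; numBuckets must be greater than zero.
func New(numBuckets int) *HashMap {
	return &HashMap{buckets: make([][]entry, numBuckets)}
}

func (hm *HashMap) Put(key string, value interface{}) {
	i := murmur3.Sum64([]byte(key)) % uint64(len(hm.buckets))
	for j := range hm.buckets[i] {
		if hm.buckets[i][j].key == key { // same key: overwrite
			hm.buckets[i][j].value = value
			return
		}
	}
	hm.buckets[i] = append(hm.buckets[i], entry{key, value})
}

// ... [Other HashMap methods]
```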

A well-distributed hash function such as MurmurHash spreads keys more evenly across buckets, which reduces collisions and improves the overall performance of a HashMap implementation. It does not change the load factor, which depends only on the number of entries and the capacity.
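The effect of distribution quality can be measured directly. The Python sketch below compares a deliberately weak hash (the sum of a key's bytes) with 64-bit FNV-1a. FNV-1a is used here in place of MurmurHash only because it fits in a few lines of pure Python; the key set, the choice of 97 buckets, and the function names are all assumptions of this example.

```python
from collections import Counter


def naive_hash(key: str) -> int:
    """Deliberately weak: sums byte values, so keys with the same bytes in any order collide."""
    return sum(key.encode("utf-8"))


def fnv1a_64(key: str) -> int:
    """64-bit FNV-1a, a simple non-cryptographic hash (not MurmurHash)."""
    h = 0xCBF29CE484222325  # FNV offset basis
    for byte in key.encode("utf-8"):
        h ^= byte
        h = (h * 0x100000001B3) & 0xFFFFFFFFFFFFFFFF  # FNV prime, truncated to 64 bits
    return h


def max_bucket_size(hash_fn, keys, num_buckets):
    """Size of the fullest bucket when keys are placed by hash_fn modulo num_buckets."""
    counts = Counter(hash_fn(k) % num_buckets for k in keys)
    return max(counts.values())


keys = [f"user{i}" for i in range(1000)]
naive_max = max_bucket_size(naive_hash, keys, 97)
fnv_max = max_bucket_size(fnv1a_64, keys, 97)
print("fullest bucket, naive hash:", naive_max)
print("fullest bucket, FNV-1a:   ", fnv_max)
```

The byte-sum hash maps many of these keys to the same few values (for instance, `user123` and `user321` always collide), so its fullest bucket is much larger than the average of roughly ten keys per bucket. A lookup in that bucket has to scan correspondingly more entries.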

FAQ

How does dynamic resizing impact memory usage in HashMaps?

Dynamic resizing lets a HashMap's capacity track the number of elements actually stored. The table can start small and grow only when the load factor reaches a predefined threshold, which keeps lookups efficient without reserving a large table up front. The cost is that each resize temporarily needs memory for both the old and the new array, and immediately after doubling the table is only about half as full as before.

What are the advantages of separate chaining for collision resolution?

Separate chaining keeps insertion, deletion, and retrieval simple, even in the presence of collisions. The linked list at each index can grow as needed, so the table never becomes full and can hold more key-value pairs than it has buckets, limited only by available memory. Lookups still slow down as the lists lengthen, so chaining is usually combined with resizing.

How does caching improve the lookup speed of HashMaps?

Caching keeps frequently accessed key-value pairs in a small, fast layer in front of the main store. When a lookup is performed, the cache is checked first. If the key is found in the cache, the corresponding value is returned immediately and the slower lookup is skipped. The gain comes from avoiding that slower lookup (disk, network, or recomputation), not from avoiding hash calculations, since the cache hashes keys too.

Why is thread safety important for HashMaps in multi-threaded environments?

Thread safety is crucial for HashMaps in multi-threaded environments to prevent data corruption and ensure consistent behavior. Without thread safety measures, concurrent access to the HashMap can lead to race conditions, data inconsistencies, and other issues. Thread-safe HashMaps employ synchronization mechanisms to ensure exclusive access to critical sections of code, maintaining data integrity.

What makes advanced hashing algorithms like MurmurHash beneficial for HashMap performance?

Hash functions like MurmurHash distribute keys evenly across buckets, which minimizes collisions for typical inputs and keeps chains and probe sequences short. The result is faster and more predictable lookups. The load factor itself is unaffected, since it depends only on the number of entries and the capacity, and MurmurHash, being non-cryptographic, does not protect against deliberately crafted collisions.