How to Extract Table Data from Websites

In today's digital landscape, web scraping has become an essential tool for extracting valuable data from websites. Among web scraping tasks, extracting table data is one of the most common, for researchers, data analysts, and businesses alike. Because tables already organize information into rows and columns, they are a rich source of data for analysis. This guide covers the main methods for extracting table data from websites, along with best practices and potential challenges.

Understanding Table Data Extraction

Table data extraction involves retrieving structured information presented in tabular form from websites. Tables are ubiquitous on the web, offering concise, organized data on a wide range of topics. From product catalogs and price comparisons to research papers and statistical reports, tables are a convenient way to present information. Extracting these tables allows users to analyze, manipulate, and repurpose data for various purposes.

Why Extract Table Data from Websites?

The ability to extract table data from websites offers numerous advantages:

- Data Analysis: Extracted table data can be easily imported into spreadsheet software or data analysis tools, enabling deeper insights and visualization.

- Research and Comparison: Researchers can quickly gather data from multiple sources, making comparisons and identifying trends more efficient.

- Content Aggregation: Websites often contain valuable, unique data. Extracting tables allows content aggregators to create comprehensive datasets.

- Product Research: E-commerce businesses can extract product specifications, prices, and reviews from competitor websites to inform their strategies.

- Web Monitoring: Tracking price changes, stock levels, or news updates in table format enables businesses to stay informed and react promptly.

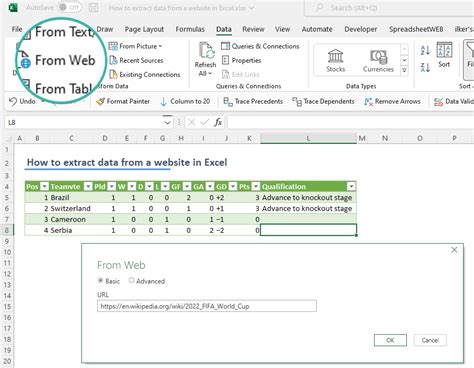

Methods for Extracting Table Data

Several methods are available for extracting table data from websites, each with its own advantages and considerations. Here are some of the most popular approaches:

1. Manual Copy-Paste

The simplest method is to manually copy and paste table data from a website into a spreadsheet or text editor. While this approach is straightforward, it is time-consuming and error-prone, especially for large datasets.

2. Web Scraping with HTML Parsing

Web scraping involves writing code to extract data from websites automatically. HTML parsing, a key technique in web scraping, lets you extract table data by locating the relevant HTML tags: `<table>` for the table itself, `<tr>` for rows, `<th>` for header cells, and `<td>` for data cells. By examining the website’s source code, you can identify these elements and extract their contents using a programming language such as Python or JavaScript.

Here’s an example of HTML code for a table:

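```html
<table>
  <tr>
    <th>Header 1</th>
    <th>Header 2</th>
  </tr>
  <tr>
    <td>Data 1</td>
    <td>Data 2</td>
  </tr>
  <tr>
    <td>Data 3</td>
    <td>Data 4</td>
  </tr>
</table>
```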
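Below is a minimal Python sketch of HTML parsing that turns the example table above into a list of rows. It uses only the standard library's `html.parser` module, so it runs without extra packages. `TableParser` is a hypothetical name. The sketch assumes a single, non-nested table whose cells all have explicit closing tags.

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collects the text of every <th>/<td> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []      # finished rows, each a list of cell strings
        self._row = None    # row currently being built
        self._cell = None   # text fragments of the cell currently open

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        # Text outside a cell (e.g. whitespace between tags) is ignored.
        if self._cell is not None:
            self._cell.append(data)


html = """
<table>
  <tr><th>Header 1</th><th>Header 2</th></tr>
  <tr><td>Data 1</td><td>Data 2</td></tr>
  <tr><td>Data 3</td><td>Data 4</td></tr>
</table>
"""

parser = TableParser()
parser.feed(html)
header, *body = parser.rows
print(header)  # ['Header 1', 'Header 2']
print(body)    # [['Data 1', 'Data 2'], ['Data 3', 'Data 4']]
```

A library such as BeautifulSoup copes better with malformed markup and nested tables. The row-and-cell logic stays the same.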

3. API Integration

Some websites offer APIs (Application Programming Interfaces) that allow developers to access their data programmatically. If the target website provides an API, you can use it to retrieve table data efficiently and reliably. However, not all websites offer public APIs, and access may be restricted or require authentication.
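Suppose a site exposes a JSON endpoint that returns a list of objects. Retrieving it and reshaping it into table rows might look like the sketch below. The endpoint URL and the bearer-token authentication scheme are hypothetical, so check the API's own documentation for its actual URLs and authentication method. The sample records are illustrative, not real data.

```python
import json
import urllib.request


def fetch_json(url, token=None):
    """GET a JSON document; sends a bearer token if given (an assumed auth scheme)."""
    request = urllib.request.Request(url, headers={"Accept": "application/json"})
    if token:
        request.add_header("Authorization", f"Bearer {token}")
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


def records_to_table(records):
    """Turn a list of JSON objects into (header, rows), keeping first-seen key order."""
    header = []
    for record in records:
        for key in record:
            if key not in header:
                header.append(key)
    # Keys missing from a record become None so every row has the same width.
    rows = [[record.get(key) for key in header] for record in records]
    return header, rows


# records = fetch_json("https://api.example.com/v1/prices")  # hypothetical endpoint
records = [  # illustrative stand-in for an API response
    {"symbol": "ABC", "price": 10.5},
    {"symbol": "XYZ", "price": 7.25, "currency": "EUR"},
]
header, rows = records_to_table(records)
```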

4. Web Scraping Tools

Web scraping tools provide graphical interfaces for extracting data from websites without writing code. These tools allow users to select and extract table data by visually interacting with the website. While convenient, these tools may have limitations in handling complex websites or dynamic content.

Best Practices for Table Data Extraction

To ensure successful and ethical table data extraction, consider the following best practices:

1. Respect Website Policies

Always review the target website’s terms of service and robots.txt file to ensure your scraping activities are permitted. Some websites explicitly disallow scraping, and violating their policies can lead to legal consequences.
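Python's standard library can check robots.txt rules for you. The sketch below uses `urllib.robotparser.RobotFileParser` on an illustrative robots.txt that does not come from any real site. Against a live site you would call `set_url()` and `read()` instead of `parse()`. The user-agent string is a hypothetical placeholder.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt content, not taken from a real website.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())

agent = "my-table-scraper"  # hypothetical user-agent string
print(rp.can_fetch(agent, "https://example.com/stats/table.html"))   # True
print(rp.can_fetch(agent, "https://example.com/private/data.html"))  # False
print(rp.crawl_delay(agent))  # 5 (seconds the site asks crawlers to wait)
```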

2. Throttle Scraping Speed

Extract data at a reasonable speed to avoid overwhelming the target website’s servers. Rapid scraping can cause performance issues for the website and may result in your IP address being blocked.
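A simple way to throttle is to enforce a minimum interval between requests. This is a minimal sketch. The `Throttle` class is a hypothetical helper, and the 2-second interval in the usage comment is an illustrative value, not a recommendation. If a site publishes a `Crawl-delay`, that value is a sensible starting point.

```python
import time


class Throttle:
    """Enforce a minimum interval (in seconds) between successive requests."""

    def __init__(self, min_interval):
        self.min_interval = min_interval
        self._last = None  # time.monotonic() value of the previous request

    def wait(self):
        """Sleep just long enough to keep min_interval between calls."""
        now = time.monotonic()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()


# Usage (urls and download() are hypothetical):
# throttle = Throttle(min_interval=2.0)
# for url in urls:
#     throttle.wait()
#     download(url)
```

Using `time.monotonic()` rather than wall-clock time keeps the spacing correct even if the system clock is adjusted mid-run.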

3. Handle Dynamic Content

Many websites use dynamic content, which can make table data extraction more challenging. Ensure your scraping method can handle JavaScript-rendered tables and dynamically loaded content.

4. Maintain Data Integrity

During extraction, ensure data integrity by preserving the structure and relationships of the table. Pay attention to row and column headers, and handle merged cells or complex table layouts correctly.
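Merged cells are encoded with the `rowspan` and `colspan` attributes, so reading a table row by row without accounting for them misaligns the columns. One common approach, sketched below, expands each merged cell into every grid position it covers. The input format is an assumption: each cell is a `(text, rowspan, colspan)` tuple, as you might collect while parsing the HTML. The sample table is illustrative only.

```python
def expand_spans(rows):
    """Expand cells with rowspan/colspan into a rectangular grid of strings.

    Each cell in `rows` is a (text, rowspan, colspan) tuple; HTML defaults
    both spans to 1 when the attributes are absent.
    """
    grid = {}  # (row_index, col_index) -> cell text
    for r, row in enumerate(rows):
        c = 0
        for text, rowspan, colspan in row:
            # Skip positions already filled by a rowspan from an earlier row.
            while (r, c) in grid:
                c += 1
            # Copy the cell's text into every position it covers.
            for dr in range(rowspan):
                for dc in range(colspan):
                    grid[(r + dr, c + dc)] = text
            c += colspan
    if not grid:
        return []
    n_rows = max(r for r, _ in grid) + 1
    n_cols = max(c for _, c in grid) + 1
    return [[grid.get((r, c), "") for c in range(n_cols)] for r in range(n_rows)]


# Illustrative table: "Region" spans two rows, "Sales" spans two columns.
rows = [
    [("Region", 2, 1), ("Sales", 1, 2)],
    [("Q1", 1, 1), ("Q2", 1, 1)],
    [("North", 1, 1), ("10", 1, 1), ("12", 1, 1)],
]
grid = expand_spans(rows)
# [['Region', 'Sales', 'Sales'], ['Region', 'Q1', 'Q2'], ['North', '10', '12']]
```

Copying the merged value into each covered position keeps every row the same width, which suits CSV export. If you need to know which values were duplicated, record the originating cell instead.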

5. Store Data Efficiently

Consider the most suitable data format for your extracted table data. CSV (Comma-Separated Values) or JSON (JavaScript Object Notation) are popular choices, offering a balance between simplicity and flexibility.
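As a minimal sketch, Python's built-in `csv` and `json` modules can write the same extracted rows in both formats. The header and rows reuse the placeholder values from the HTML example earlier. When writing to a file instead of a string buffer, open it with `newline=""`, as the `csv` module documentation recommends.

```python
import csv
import io
import json

header = ["Header 1", "Header 2"]
body = [["Data 1", "Data 2"], ["Data 3", "Data 4"]]

# CSV: header line first, then one line per row.
buf = io.StringIO()
writer = csv.writer(buf)
writer.writerow(header)
writer.writerows(body)
csv_text = buf.getvalue()

# JSON: one object per row, keyed by the column headers.
records = [dict(zip(header, row)) for row in body]
json_text = json.dumps(records, indent=2)
```

CSV is compact and opens directly in spreadsheets. The JSON form keeps the header name next to every value, which is easier to consume from other programs.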

Challenges and Considerations

Table data extraction can present several challenges, including:

1. Website Structure Complexity

Some websites have intricate HTML structures, making it difficult to identify and extract table data accurately. Advanced HTML parsing techniques or custom scraping scripts may be required.

2. Dynamic Content Rendering

Tables rendered using JavaScript or AJAX calls can be challenging to extract. Ensure your scraping method can handle dynamic content to avoid missing critical data.

3. Anti-Scraping Measures

Some websites employ anti-scraping measures, such as CAPTCHAs or IP blocking, to prevent automated data extraction. Be prepared to handle these obstacles or consider alternative data sources.
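One obstacle you can handle politely is rate limiting. Many servers answer HTTP 429 (Too Many Requests) or 503 (Service Unavailable) when a client requests pages too quickly. The sketch below retries with exponential backoff and honors a numeric `Retry-After` header.

`fetch_with_backoff` is a hypothetical helper, and the retry count and base delay are arbitrary. `fetch` is any function that raises `urllib.error.HTTPError` on failure, for example one wrapping `urllib.request.urlopen`. This sketch does not address CAPTCHAs.

```python
import time
import urllib.error

RETRYABLE = {429, 503}  # rate-limited / temporarily unavailable


def fetch_with_backoff(fetch, url, max_retries=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying on HTTP 429/503 with exponential backoff."""
    for attempt in range(max_retries + 1):
        try:
            return fetch(url)
        except urllib.error.HTTPError as err:
            if err.code not in RETRYABLE or attempt == max_retries:
                raise
            # Prefer the server's Retry-After (delta-seconds form only);
            # otherwise wait base_delay, 2 * base_delay, 4 * base_delay, ...
            retry_after = err.headers.get("Retry-After") if err.headers is not None else None
            if retry_after is not None and retry_after.strip().isdigit():
                delay = float(retry_after)
            else:
                delay = base_delay * 2 ** attempt
            sleep(delay)
```

The `sleep` parameter exists so the waiting can be swapped out, for example in tests.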

4. Data Quality and Consistency

Extracted data may contain errors or inconsistencies. Implement data validation and cleaning processes to ensure the accuracy and reliability of your dataset.
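A minimal validation and cleaning pass might do two things. It rejects rows with the wrong number of cells, and it converts numeric columns from display strings to numbers. The helpers below are hypothetical, and the sample rows are illustrative. `parse_number` assumes English-style formatting, with `,` as the thousands separator and `.` as the decimal point; other locales need a different rule.

```python
import re

_NUMBER = re.compile(r"-?\d+(?:\.\d+)?")


def parse_number(cell):
    """Extract the first number from a display string like ' $1,234.50 ', else None."""
    match = _NUMBER.search(cell.replace(",", ""))
    return float(match.group()) if match else None


def clean_rows(rows, n_cols, numeric_cols):
    """Return (clean, rejected): strip whitespace, check width, parse numeric columns."""
    clean, rejected = [], []
    for row in rows:
        cells = [cell.strip() for cell in row]
        if len(cells) != n_cols:
            rejected.append(row)  # keep the original row for later inspection
            continue
        for i in numeric_cols:
            cells[i] = parse_number(cells[i])
        clean.append(cells)
    return clean, rejected


rows = [["Widget", " $1,234.50 "], ["Gadget", "n/a"], ["broken row"]]  # illustrative input
clean, rejected = clean_rows(rows, n_cols=2, numeric_cols=[1])
```

Unparseable values become `None` rather than being dropped silently, so they can be counted or reviewed alongside the rejected rows.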

Real-World Applications and Use Cases

Table data extraction finds applications in various domains:

1. E-commerce

Online retailers can extract product specifications, prices, and reviews from competitor websites to inform their pricing strategies, product offerings, and marketing campaigns.

2. Research and Academia

Researchers can gather and analyze large datasets from various sources, facilitating comparative studies, meta-analyses, and literature reviews.

3. News and Media

Media organizations can extract and analyze data from news articles, enabling them to identify trends, generate insights, and create data-driven narratives.

4. Financial Services

Financial institutions can track stock prices, currency exchange rates, and market trends by extracting table data from financial websites and news sources.

Performance Analysis and Benchmarking

When choosing a table data extraction method, consider the following factors to ensure optimal performance and efficiency:

Speed and Scalability

Evaluate the extraction method’s speed, especially when dealing with large datasets. Consider the scalability of the approach to handle increasing data volumes.

Accuracy and Consistency

Assess the method’s ability to extract data accurately, paying attention to row and column headers, merged cells, and complex table structures.

Ease of Use

For non-technical users, the simplicity and user-friendliness of the extraction method are crucial. Consider whether a tool requires coding skills or offers a graphical interface.

Reliability and Maintenance

Ensure the chosen method is reliable and well-maintained. Regular updates and active community support can indicate the method’s long-term viability.

Future Implications and Innovations

The field of web scraping and data extraction is constantly evolving. Here are some potential future developments and trends:

1. AI-Powered Extraction

Advancements in artificial intelligence and machine learning may lead to more sophisticated extraction methods, capable of handling complex website structures and dynamic content.

2. Cloud-Based Scraping Services

Cloud-based scraping services could offer scalable and efficient data extraction, making it easier for businesses to access and analyze web data without maintaining their own infrastructure.

3. Regulatory Changes

Changes in data privacy and copyright laws may impact the legality of web scraping practices. Staying informed about regulatory changes is essential for ethical and compliant data extraction.

4. Ethical Considerations

As web scraping becomes more prevalent, ethical considerations will gain prominence. Responsible data extraction practices, including respecting website policies and ensuring data privacy, will become increasingly important.

Conclusion

Extracting table data from websites is a powerful technique with wide-ranging applications. By understanding the various methods, best practices, and potential challenges, you can harness the potential of web scraping to gain valuable insights from structured data. As the field evolves, staying updated with the latest technologies and ethical considerations will ensure your data extraction practices remain effective and responsible.

How can I ensure my web scraping activities are ethical and legal?

To ensure ethical and legal web scraping, always review the target website’s terms of service and robots.txt file, and respect any restrictions they impose. Additionally, be mindful of data privacy laws and avoid extracting personally identifiable information without consent.

What are some common challenges when extracting table data from dynamic websites?

Dynamic websites often render tables using JavaScript or AJAX calls, so the table may be missing from the raw HTML the server returns. To capture it accurately, you may need a browser automation tool such as Selenium or Playwright, which renders the page in a headless browser before you extract the table.

Are there any tools or libraries specifically designed for table data extraction?

Yes, several tools and libraries exist for table data extraction. Examples include BeautifulSoup, a Python library for HTML parsing; the pandas function `read_html`, which parses every `<table>` on a page into a DataFrame; and Table Extractor, a web scraping tool designed for extracting tables from websites.

How can I handle websites that employ anti-scraping measures like CAPTCHAs or IP blocking?

If a website employs CAPTCHAs or IP blocking, you may need to consider alternative data sources or methods. Some websites offer APIs that can provide access to their data without the need for web scraping. Alternatively, you can use proxy servers or rotating IP addresses to mitigate IP blocking.