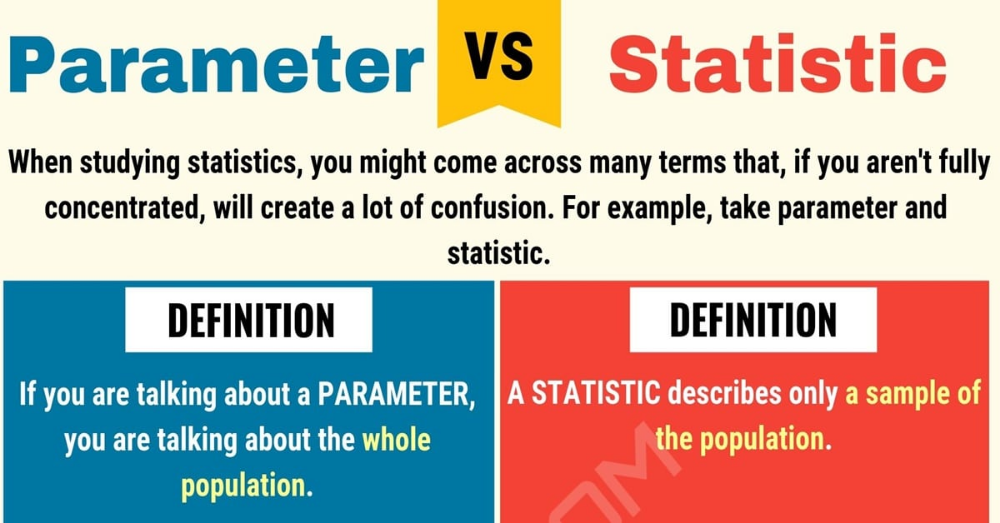

Two Essential Ways: Parameters vs Statistics

In the world of data analysis and machine learning, two fundamental concepts often come into play: parameters and statistics. These terms, while seemingly similar, serve distinct purposes and play crucial roles in various stages of the data science pipeline. Understanding the difference between parameters and statistics is essential for anyone venturing into the field, as it forms the basis for accurate analysis, model building, and decision-making.

This comprehensive guide aims to explore these two concepts, delving into their definitions, applications, and the unique insights they offer. By the end of this article, readers will not only grasp the theoretical distinctions but also appreciate the practical implications of parameters and statistics in real-world scenarios.

Parameters: The Adjustable Knobs of Machine Learning

Parameters, in the context of machine learning, refer to the adjustable values or settings within a model. They are the "knobs" that data scientists and machine learning engineers tweak to optimize the performance of their algorithms. These parameters are typically set during the training phase of a model and remain fixed once the model is deployed.

Types of Parameters

There are several types of parameters, each serving a specific purpose in the model-building process. Here are some of the most common types:

- Model Parameters: These are the core parameters that define the behavior of a model. For instance, in a linear regression model, the slope and intercept are model parameters. In a neural network, the weights and biases are model parameters.

- Hyperparameters: Hyperparameters are the settings that control the learning process of a model. They are not learned from the data but are chosen before training, either manually or through an automated search. Examples include learning rate, regularization strength, and the number of hidden layers in a neural network.

- Optimization Parameters: These parameters are related to the optimization algorithm used to train the model. They influence how the model updates its parameters during training. Examples include the choice of optimization algorithm (e.g., stochastic gradient descent, Adam) and settings such as momentum and batch size. In practice, these are usually treated as hyperparameters as well.
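One way to keep the three categories apart in code is to store them in separate containers. The sketch below is only an illustration: the class names `LinearModel` and `TrainingConfig`, their fields, and the default values are hypothetical and not taken from any particular library.

```python
from dataclasses import dataclass


@dataclass
class LinearModel:
    """Model parameters: values that training is expected to update."""
    slope: float = 0.0
    intercept: float = 0.0


@dataclass(frozen=True)
class TrainingConfig:
    """Hyperparameters and optimizer settings: fixed before training starts."""
    learning_rate: float = 0.01   # hyperparameter
    l2_strength: float = 0.0      # hyperparameter (regularization strength)
    momentum: float = 0.9         # optimization setting
    batch_size: int = 32          # optimization setting


def predict(model: LinearModel, x: float) -> float:
    """Apply the model parameters to a single input value."""
    return model.intercept + model.slope * x


# Hypothetical values chosen only to show how the pieces fit together.
config = TrainingConfig(learning_rate=0.05)
model = LinearModel(slope=2.0, intercept=1.0)
prediction = predict(model, 3.0)
```

Marking `TrainingConfig` as frozen reflects the idea that these settings do not change while a single training run is in progress.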

Setting Parameters

Setting parameters, especially hyperparameters, is often iterative and experimental. Data scientists may start with default values or values suggested by domain knowledge and then fine-tune them based on the performance of the model on validation data. Techniques like grid search or Bayesian optimization are commonly used to search for a well-performing set of hyperparameters.
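A grid search can be sketched in a few lines. The example below assumes a deliberately tiny model (a single slope with an L2 penalty and no intercept) so that fitting has a closed form; the function names, the candidate values, and the data are all made up for illustration.

```python
def fit_ridge_slope(xs, ys, l2_strength):
    """Closed-form slope for y = slope * x with an L2 penalty on the slope."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + l2_strength)


def mean_squared_error(xs, ys, slope):
    """Average squared difference between predictions and targets."""
    return sum((y - slope * x) ** 2 for x, y in zip(xs, ys)) / len(xs)


def grid_search(train, valid, candidates):
    """Try each candidate hyperparameter; keep the one with the lowest validation error."""
    best = None
    for l2_strength in candidates:
        slope = fit_ridge_slope(train[0], train[1], l2_strength)   # learn the parameter
        score = mean_squared_error(valid[0], valid[1], slope)      # judge the hyperparameter
        if best is None or score < best[1]:
            best = (l2_strength, score, slope)
    return best


# Hypothetical data, invented for this sketch.
train = ([1.0, 2.0, 3.0, 4.0], [2.1, 3.9, 6.2, 7.8])
valid = ([5.0, 6.0], [10.1, 11.8])
candidates = [0.0, 0.1, 1.0, 10.0]

best_l2, best_score, best_slope = grid_search(train, valid, candidates)
```

Note the division of labor: the slope is learned from the training data, while the regularization strength is selected by comparing validation scores.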

Example: Linear Regression

Consider a linear regression model, which predicts a continuous outcome from one or more input features. The equation for the model is:

$$y = \beta_0 + \beta_1x_1 + \beta_2x_2 + ... + \beta_nx_n$$

Here, $y$ is the predicted outcome, $x_1, x_2, ..., x_n$ are the input features, and $\beta_0, \beta_1, ..., \beta_n$ are the model parameters. The $\beta$ values represent the coefficients that determine the contribution of each input feature to the predicted outcome.

In this example, $\beta_0$ is the intercept (the predicted value when all input features are zero), and $\beta_1, ..., \beta_n$ are the coefficients (slopes) of the respective input features. These parameters are learned during training by minimizing the difference between the predicted and actual outcomes, most commonly the sum of squared differences (ordinary least squares).
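For the single-feature case, the least-squares values of $\beta_0$ and $\beta_1$ have a simple closed form. The sketch below assumes one input feature and uses made-up data that lies exactly on the line $y = 1 + 2x$; the function name is hypothetical.

```python
def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares for one feature: returns (beta_0, beta_1)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: covariance of x and y divided by the variance of x.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    beta_1 = sxy / sxx
    # Intercept: forces the fitted line through the point of means.
    beta_0 = mean_y - beta_1 * mean_x
    return beta_0, beta_1


# Hypothetical data generated from y = 1 + 2x with no noise.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

beta_0, beta_1 = fit_simple_linear_regression(xs, ys)
```

Because the data contains no noise, the fit recovers the intercept and slope that generated it; with real data the estimates would only approximate the underlying relationship.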

Statistics: Quantifying Data Insights

Statistics, on the other hand, are numerical measures derived from data. They provide valuable insights into the characteristics of a dataset, helping analysts understand patterns, trends, and relationships. Unlike parameters, statistics are not adjustable; they are calculated from the data and often serve as input for models or as indicators for decision-making.

Types of Statistics

Statistics can be broadly categorized into two types: descriptive statistics and inferential statistics.

- Descriptive Statistics: These are measures that summarize the main characteristics of a dataset. Examples include mean, median, mode, standard deviation, and correlation coefficients. Descriptive statistics help describe the central tendency, variability, and relationships within the data.

- Inferential Statistics: Inferential statistics involve using sample data to make generalizations about a larger population. Techniques like hypothesis testing, confidence intervals, and regression analysis fall under this category. Inferential statistics are used to draw conclusions and make predictions based on limited data.

Calculating Statistics

Statistics are calculated using various mathematical formulas and algorithms. For example, the mean of a dataset is the sum of all values divided by the number of values. The standard deviation measures how widely the values are spread around the mean; it is the square root of the variance, which is the average of the squared differences from the mean. When the data are a sample rather than the whole population, the sum of squared differences is usually divided by n - 1 instead of n.
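The formulas above translate directly into code. The following sketch computes them by hand and cross-checks the results against Python's standard `statistics` module; the scores are hypothetical values invented for illustration.

```python
import math
import statistics

# Hypothetical test scores, invented for illustration only.
scores = [70, 75, 80, 65, 85]

# Mean: sum of the values divided by their count.
mean = sum(scores) / len(scores)

# Variance: average of the squared differences from the mean.
squared_diffs = [(s - mean) ** 2 for s in scores]
population_variance = sum(squared_diffs) / len(scores)        # divide by n
sample_variance = sum(squared_diffs) / (len(scores) - 1)      # divide by n - 1

# Standard deviation: square root of the variance.
population_std = math.sqrt(population_variance)
sample_std = math.sqrt(sample_variance)

# The standard library offers the same quantities directly.
library_population_std = statistics.pstdev(scores)
library_sample_std = statistics.stdev(scores)
```

The population and sample versions differ only in the divisor, which matters most for small datasets like this one.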

Example: Student Performance Analysis

Imagine a school administrator analyzing the performance of students in a particular subject. They collect data on the students' test scores, attendance, and study hours. The administrator calculates the following statistics:

| Statistic | Value |
|---|---|
| Mean Test Score | 75% |
| Standard Deviation of Test Scores | 8% |
| Correlation between Study Hours and Test Scores | 0.65 |

These statistics provide valuable insights. The mean test score gives an overall idea of the students' performance, while the standard deviation indicates how spread out the scores are. The correlation coefficient suggests a positive relationship between study hours and test scores, indicating that students who study more tend to perform better.
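A correlation coefficient like the one in the table is typically the Pearson correlation. The sketch below shows how it could be computed; the study hours and scores are made-up numbers, not the administrator's data, so the result is not expected to match the 0.65 reported above.

```python
import math


def pearson_correlation(xs, ys):
    """Pearson correlation: covariance scaled by both standard deviations."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    # The 1/n factors cancel between numerator and denominator.
    return cov / math.sqrt(var_x * var_y)


# Hypothetical weekly study hours and test scores for five students.
study_hours = [2, 4, 5, 7, 9]
test_scores = [62, 70, 68, 80, 85]

r = pearson_correlation(study_hours, test_scores)
```

The value always lies between -1 and 1. A positive value means the two quantities tend to rise together, which on its own does not show that one causes the other.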

The Interplay Between Parameters and Statistics

While parameters and statistics serve different purposes, they often work hand in hand in the data science workflow. Statistics are used to understand the data and guide the selection of appropriate models and parameters. For instance, the choice of a linear regression model in the example above was based on the assumption that the relationship between the input features and the outcome is linear, which can be supported by statistical analysis.

Once a model is chosen, its parameters are optimized to fit the data. These optimized parameters can then be used to make predictions on new, unseen data. Statistics can also be calculated on the predicted values to evaluate the model's performance and its agreement with the actual data.

Parameter Estimation

Parameter estimation is a critical step in model building. It involves finding the optimal values for the model parameters that minimize the difference between the predicted and actual outcomes. This process is often iterative and can be computationally intensive, especially for complex models with many parameters.

Various optimization algorithms are used for parameter estimation, such as gradient descent, which iteratively adjusts the parameters based on the gradient of the error function. Regularization techniques are often employed to prevent overfitting and improve the generalization ability of the model.
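As a rough sketch of this idea, the function below runs plain (full-batch) gradient descent on a one-feature linear model with an optional L2 penalty on the slope. The learning rate, step count, penalty form, and data are assumptions chosen so the example converges; they are not recommendations.

```python
def gradient_descent(xs, ys, learning_rate=0.05, l2_strength=0.0, steps=2000):
    """Minimize mean squared error plus l2_strength * beta_1**2."""
    beta_0, beta_1 = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction errors under the current parameters.
        errors = [beta_0 + beta_1 * x - y for x, y in zip(xs, ys)]
        # Gradients of the error function with respect to each parameter.
        grad_0 = 2 * sum(errors) / n
        grad_1 = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_1 += 2 * l2_strength * beta_1  # the penalty only affects the slope here
        # Step against the gradient.
        beta_0 -= learning_rate * grad_0
        beta_1 -= learning_rate * grad_1
    return beta_0, beta_1


# Hypothetical data generated from y = 1 + 2x with no noise.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

plain_intercept, plain_slope = gradient_descent(xs, ys)
_, shrunk_slope = gradient_descent(xs, ys, l2_strength=1.0)
```

Without the penalty, the iterations approach the least-squares solution. With the penalty switched on, the slope is pulled toward zero, which is the mechanism regularization uses to discourage overfitting.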

Model Evaluation and Selection

Statistics play a crucial role in evaluating the performance of different models and selecting the best one for a given task. Metrics like mean squared error, R-squared, and accuracy are used to compare models and assess their effectiveness. Cross-validation techniques split the data into several subsets (folds), train the model on all but one fold, evaluate it on the held-out fold, and repeat until each fold has served once as the evaluation set. Averaging the results provides a more robust estimate of a model's performance than a single train/test split.
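The evaluation loop can be sketched as follows, assuming a one-feature least-squares model and a simple interleaved assignment of rows to folds (in practice the data is usually shuffled first). All function names and data here are hypothetical.

```python
def fit_line(xs, ys):
    """Least-squares intercept and slope for one feature."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def mse(xs, ys, intercept, slope):
    """Mean squared error of the line on the given points."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys)) / len(xs)


def r_squared(xs, ys, intercept, slope):
    """Share of the variance in ys explained by the line."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1 - ss_res / ss_tot


def cross_validated_mse(xs, ys, k):
    """Average held-out MSE over k folds."""
    n = len(xs)
    scores = []
    for fold in range(k):
        held_out = set(range(fold, n, k))  # every k-th row goes to this fold
        train_x = [x for i, x in enumerate(xs) if i not in held_out]
        train_y = [y for i, y in enumerate(ys) if i not in held_out]
        test_x = [x for i, x in enumerate(xs) if i in held_out]
        test_y = [y for i, y in enumerate(ys) if i in held_out]
        intercept, slope = fit_line(train_x, train_y)   # train on k - 1 folds
        scores.append(mse(test_x, test_y, intercept, slope))  # score on the rest
    return sum(scores) / k


# Hypothetical noise-free data from y = 1 + 2x.
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0, 11.0]

cv_score = cross_validated_mse(xs, ys, k=3)
full_intercept, full_slope = fit_line(xs, ys)
full_r2 = r_squared(xs, ys, full_intercept, full_slope)
```

Each row is used for evaluation exactly once and never by the model that was trained on it, which is what makes the averaged score a fairer estimate of performance on unseen data.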

Real-World Applications

The distinction between parameters and statistics is not merely an academic concept; it has far-reaching implications in various industries and applications.

Healthcare

In healthcare, models with learned parameters are trained to predict disease outcomes based on patient data. For instance, a model might take input features such as age, gender, and medical history and apply its learned parameters to predict the likelihood of a patient developing a particular condition. Statistics, on the other hand, can be used to analyze the effectiveness of treatments, track the spread of diseases, and identify risk factors.

Finance

Financial institutions use parameters to build models that predict stock prices, interest rates, and credit risk. These models are then used to make investment decisions and manage risk. Statistics, such as historical price trends and volatility, are crucial in understanding market behavior and making informed financial decisions.

Marketing

In marketing, parameters are adjusted to optimize advertising campaigns. For example, in targeted advertising, parameters like budget, demographics, and interests are used to reach the right audience. Statistics, such as click-through rates and conversion rates, are analyzed to evaluate the effectiveness of campaigns and make data-driven decisions.

Conclusion

Parameters and statistics are fundamental concepts in data analysis and machine learning. While parameters are adjustable values that define a model's behavior, statistics are numerical measures derived from data that provide insights into its characteristics. The interplay between these two concepts is vital for building effective models, making accurate predictions, and gaining valuable insights from data.

As data science continues to evolve and play an increasingly crucial role in various industries, a deep understanding of parameters and statistics will remain essential for data professionals. By grasping these concepts and their applications, practitioners can harness the power of data to drive innovation and make informed decisions.

What is the difference between a parameter and a statistic in machine learning?

A parameter is an adjustable value or setting within a model that is optimized during the training process. It defines the behavior of the model and remains fixed once the model is deployed. In contrast, a statistic is a numerical measure derived from data, providing insights into the characteristics of a dataset. Statistics are calculated from the data and are not adjustable.

How are parameters set in machine learning models?

Model parameters are learned during the training phase of a model. Hyperparameters, by contrast, are chosen beforehand: data scientists may start with default values or values suggested by domain knowledge and then fine-tune them based on the performance of the model on validation data. Techniques like grid search or Bayesian optimization are commonly used to search for a well-performing set of hyperparameters.

What role do statistics play in data analysis and decision-making?

Statistics provide valuable insights into the characteristics of a dataset. They help describe the central tendency, variability, and relationships within the data. By analyzing statistics, analysts can make informed decisions, draw conclusions, and predict outcomes based on limited data.

How are parameters and statistics interconnected in the data science workflow?

Statistics are used to understand the data and guide the selection of appropriate models and parameters. Once a model is chosen, its parameters are optimized to fit the data. The optimized parameters can then be used to make predictions on new, unseen data. Statistics are also calculated on the predicted values to evaluate the model’s performance.

Can you provide an example of how parameters and statistics are used in real-world applications?

In healthcare, models with learned parameters are trained to predict disease outcomes based on patient data. For instance, a model might take input features such as age and medical history and apply its learned parameters to predict the likelihood of a patient developing a particular condition. Statistics, such as the mean and standard deviation of patient data, can be used to analyze treatment effectiveness and identify risk factors.