Character Translation with PyTorch's LSTM

In natural language processing (NLP), character-level models have attracted attention because they can capture fine-grained patterns such as spelling, morphology, and punctuation. Long Short-Term Memory (LSTM) networks are a common choice for these tasks. This guide walks through character translation with PyTorch's LSTM: what it is, how to build and train a model, and where it is applied.

Understanding Character Translation

Character translation, framed here as character-level sequence-to-sequence (seq2seq) modeling, converts an input sequence of characters into a corresponding output sequence. Unlike word-level translation, which treats words or phrases as tokens, character translation uses individual characters as the basic unit. The vocabulary is therefore small and no word is ever out of vocabulary, but sequences become much longer, so the model has to track dependencies over more time steps.

Character translation is used in various NLP tasks, including machine translation, text generation, spelling correction, and creative writing. Given enough character-level training data for the task, an LSTM model can produce coherent and contextually appropriate sequences of characters.

The Power of PyTorch’s LSTM

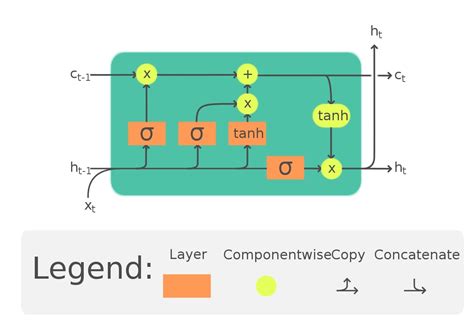

PyTorch, an open-source machine learning library, provides a rich set of tools and functionalities for building and training deep learning models. Among its offerings, the LSTM architecture stands out as a powerful tool for sequential data processing. LSTM networks are a variant of Recurrent Neural Networks (RNNs) designed to address the vanishing gradient problem, making them well-suited for learning long-term dependencies in sequential data.

PyTorch's implementation of LSTM offers a straightforward and flexible approach to building character translation models. It allows developers to easily define the architecture, train the model on large datasets, and fine-tune its parameters to achieve optimal performance. The library's extensive documentation and community support further enhance the development process, providing valuable resources for beginners and experts alike.

Implementing Character Translation with PyTorch’s LSTM

To embark on our character translation journey, we will follow a step-by-step process, leveraging PyTorch’s capabilities to build and train our LSTM model. Here’s an overview of the key steps involved:

Data Preparation

The first step is to prepare our dataset, which consists of pairs of input and output sequences. Each input sequence represents a source text, and the corresponding output sequence is the desired translation or generation. It is crucial to ensure that the dataset is diverse and representative of the target task to avoid bias and overfitting.

During data preparation, we perform tokenization, splitting the input and output sequences into individual characters. This process allows our model to learn the patterns and dependencies between characters. Additionally, we may opt to perform data augmentation techniques to enhance the diversity of our dataset and improve the model's generalization capabilities.
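The tokenization step can be sketched as follows. This is a minimal illustration, not a prescribed pipeline: the toy `pairs` data, the special tokens `<pad>`, `<sos>`, `<eos>`, and the helper names `build_vocab`, `encode`, and `decode` are all assumptions introduced here.

```python
# Hypothetical toy data: (source, target) pairs, e.g. for spelling correction.
pairs = [("helo", "hello"), ("wrld", "world")]

# Assumed special tokens for padding, start of sequence, and end of sequence.
PAD, SOS, EOS = "<pad>", "<sos>", "<eos>"

def build_vocab(texts):
    """Map every distinct character to an integer id, after the special tokens."""
    chars = sorted(set("".join(texts)))
    itos = [PAD, SOS, EOS] + chars
    stoi = {tok: i for i, tok in enumerate(itos)}
    return stoi, itos

def encode(text, stoi):
    """Turn a string into a list of ids wrapped in <sos> ... <eos>."""
    return [stoi[SOS]] + [stoi[ch] for ch in text] + [stoi[EOS]]

def decode(ids, itos):
    """Invert encode(), dropping the special tokens."""
    specials = {PAD, SOS, EOS}
    return "".join(itos[i] for i in ids if itos[i] not in specials)

stoi, itos = build_vocab([s for s, _ in pairs] + [t for _, t in pairs])
ids = encode("hello", stoi)
print(ids)                # [1, 5, 4, 6, 6, 7, 2]
print(decode(ids, itos))  # hello
```

A character that never appears in the training texts would raise a `KeyError` in `encode`; a real pipeline would usually reserve an extra "unknown" id for that case.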

Model Architecture

Next, we define the architecture of our LSTM model using PyTorch’s neural network modules. The model consists of an embedding layer, one or more LSTM layers, and a final linear layer for generating the output sequence. The embedding layer maps each character ID to a dense, learned vector, so that characters that behave similarly in the data can end up with similar representations.

The LSTM layers process the embedded sequence one step at a time and produce a hidden state for every position, which lets the model carry information across long spans. Each hidden state is passed through the linear layer to produce a score (logit) for every character in the vocabulary. The model is trained with a loss function such as cross-entropy, which penalizes low probability assigned to the correct target character.
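The three-layer architecture described above might look like the sketch below. The class name `CharLSTM` and all sizes (`embed_dim`, `hidden_dim`, a vocabulary of 30 characters) are illustrative assumptions; only `nn.Embedding`, `nn.LSTM`, and `nn.Linear` come from PyTorch itself.

```python
import torch
import torch.nn as nn

class CharLSTM(nn.Module):
    """Hypothetical minimal model: embedding -> LSTM -> linear."""

    def __init__(self, vocab_size, embed_dim=32, hidden_dim=64, num_layers=1):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, embed_dim)
        self.lstm = nn.LSTM(embed_dim, hidden_dim,
                            num_layers=num_layers, batch_first=True)
        self.out = nn.Linear(hidden_dim, vocab_size)

    def forward(self, x, state=None):
        emb = self.embed(x)                    # (batch, seq_len, embed_dim)
        hidden, state = self.lstm(emb, state)  # (batch, seq_len, hidden_dim)
        logits = self.out(hidden)              # (batch, seq_len, vocab_size)
        return logits, state

model = CharLSTM(vocab_size=30)
x = torch.randint(0, 30, (4, 12))   # batch of 4 sequences, 12 character ids each
logits, (h, c) = model(x)
print(logits.shape)                 # torch.Size([4, 12, 30])
```

Note that this single stack emits one output per input position. When source and target sequences differ in length, a common alternative is an encoder-decoder pair of LSTMs, where the encoder's final state initializes the decoder.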

Training and Evaluation

With our model architecture defined, we proceed to train the LSTM using the prepared dataset. PyTorch lets us move the model and each batch to a GPU for faster training. We split our dataset into training and validation sets and use the latter to monitor the model’s performance on unseen data, which is how overfitting is detected.
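A simple way to perform the split and pick a device is sketched here. The helper `train_val_split`, the 10% validation fraction, and the toy `pairs` list are assumptions for illustration; `torch.cuda.is_available()` and `.to(device)` are standard PyTorch calls.

```python
import random
import torch

def train_val_split(pairs, val_fraction=0.1, seed=0):
    """Shuffle once with a fixed seed, then hold out a fraction for validation."""
    pairs = list(pairs)
    random.Random(seed).shuffle(pairs)
    n_val = max(1, int(len(pairs) * val_fraction))
    return pairs[n_val:], pairs[:n_val]

# Hypothetical toy data: 20 (source, target) string pairs.
pairs = [(f"src{i}", f"tgt{i}") for i in range(20)]
train_pairs, val_pairs = train_val_split(pairs)

# Use the GPU when one is present, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
batch = torch.zeros(4, 10, dtype=torch.long).to(device)
print(len(train_pairs), len(val_pairs), batch.device.type)
```

The model itself is moved the same way, with `model.to(device)`, before training starts.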

During training, we feed batches of input sequences to the model, computing the loss and updating the model's parameters using backpropagation and an optimization algorithm, such as Adam. Regularization techniques, such as dropout, may be employed to further improve the model's generalization capabilities. Additionally, we can utilize early stopping to prevent overfitting and ensure efficient training.
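The loop below is one way these pieces could fit together: mini-batches, cross-entropy loss, Adam, dropout, and early stopping on validation loss. Everything specific here is an assumption made for the sketch: the `TinyCharLSTM` class, the synthetic copy task (the target equals the input), the layer sizes, the learning rate, and a patience of 3 epochs.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
VOCAB, PAD_ID = 20, 0   # assumed vocabulary size and padding id

class TinyCharLSTM(nn.Module):
    def __init__(self):
        super().__init__()
        self.embed = nn.Embedding(VOCAB, 16, padding_idx=PAD_ID)
        self.lstm = nn.LSTM(16, 32, batch_first=True)
        self.drop = nn.Dropout(0.1)          # regularization on LSTM outputs
        self.out = nn.Linear(32, VOCAB)

    def forward(self, x):
        hidden, _ = self.lstm(self.embed(x))
        return self.out(self.drop(hidden))   # (batch, seq_len, VOCAB)

# Synthetic stand-in data: the target is the input itself (a copy task).
train_x = torch.randint(1, VOCAB, (64, 10))
val_x = torch.randint(1, VOCAB, (16, 10))

model = TinyCharLSTM()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-2)
criterion = nn.CrossEntropyLoss(ignore_index=PAD_ID)  # padded positions add no loss

def batch_loss(x, y):
    logits = model(x)
    # CrossEntropyLoss expects (N, classes) logits and (N,) targets.
    return criterion(logits.reshape(-1, VOCAB), y.reshape(-1))

best_val, patience, bad_epochs = float("inf"), 3, 0
history = []
for epoch in range(30):
    model.train()                            # enables dropout
    for i in range(0, len(train_x), 16):
        xb = train_x[i:i + 16]
        optimizer.zero_grad()
        loss = batch_loss(xb, xb)
        loss.backward()                      # backpropagation through time
        optimizer.step()

    model.eval()                             # disables dropout
    with torch.no_grad():
        val_loss = batch_loss(val_x, val_x).item()
    history.append(val_loss)

    # Early stopping: quit after `patience` epochs without improvement.
    if val_loss < best_val - 1e-4:
        best_val, bad_epochs = val_loss, 0
    else:
        bad_epochs += 1
        if bad_epochs >= patience:
            break

print(f"epochs run: {len(history)}, best validation loss: {best_val:.3f}")
```

In practice one would also save the model weights whenever the validation loss improves, so that the best checkpoint can be restored after stopping.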

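To evaluate the model's performance, we use metrics such as perplexity, BLEU score, or other task-specific evaluation metrics. Perplexity measures how well the model predicts held-out characters, while BLEU compares generated output against reference translations; for character-level output, character error rate or chrF are common task-specific choices. Together, these metrics indicate how coherent and accurate the generated translations are.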
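Two of these metrics are easy to compute by hand, as the sketch below shows. Perplexity is the exponential of the mean per-character cross-entropy (measured in nats). Character error rate is included here as one example of a task-specific metric, an assumption of this sketch rather than something the text prescribes; it is the edit distance between prediction and reference divided by the reference length. The function names are illustrative.

```python
import math

def perplexity(mean_cross_entropy):
    """Perplexity is exp of the mean per-character cross-entropy (in nats)."""
    return math.exp(mean_cross_entropy)

def edit_distance(a, b):
    """Levenshtein distance between two strings, computed row by row."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]

def char_error_rate(prediction, reference):
    """Edits needed to turn the prediction into the reference, per reference char."""
    return edit_distance(prediction, reference) / max(1, len(reference))

# A model that guesses uniformly over 4 characters has perplexity of about 4.
print(perplexity(math.log(4)))
# One missing character out of five reference characters.
print(char_error_rate("helo", "hello"))  # 0.2
```

Lower is better for both: a perplexity of 1 means the model is certain and correct, and a character error rate of 0 means the output matches the reference exactly.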

Generation and Inference

Once our LSTM model is trained and evaluated, we can use it for generation and inference. Given an input sequence, the model generates the corresponding output sequence character by character. This involves feeding the input sequence to the model, retrieving the hidden state, and using it to predict the next character; each predicted character is then fed back in as the next input until an end-of-sequence marker or a length limit is reached.
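The character-by-character loop can be sketched with greedy decoding, which always picks the single most likely next character. The `CharLSTM` class, the function `greedy_generate`, the id `2` for the end-of-sequence marker, and the example prefix are assumptions for illustration. The model here is untrained, so the output ids are arbitrary; the point is the control flow.

```python
import torch
import torch.nn as nn

class CharLSTM(nn.Module):
    """Hypothetical minimal model: embedding -> LSTM -> linear."""
    def __init__(self, vocab_size, embed_dim=16, hidden_dim=32):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, embed_dim)
        self.lstm = nn.LSTM(embed_dim, hidden_dim, batch_first=True)
        self.out = nn.Linear(hidden_dim, vocab_size)

    def forward(self, x, state=None):
        hidden, state = self.lstm(self.embed(x), state)
        return self.out(hidden), state

@torch.no_grad()
def greedy_generate(model, prefix_ids, eos_id, max_new=20):
    """Run the prefix through the model, then feed each prediction back in."""
    model.eval()
    # Process the whole input once and keep the resulting (h, c) state.
    logits, state = model(torch.tensor([prefix_ids]))
    generated = []
    next_id = int(logits[0, -1].argmax())
    while next_id != eos_id and len(generated) < max_new:
        generated.append(next_id)
        # One step: the last predicted character plus the carried state.
        logits, state = model(torch.tensor([[next_id]]), state)
        next_id = int(logits[0, -1].argmax())
    return generated

torch.manual_seed(0)
model = CharLSTM(vocab_size=12)
result = greedy_generate(model, prefix_ids=[1, 5, 7], eos_id=2)
print(result)
```

Replacing `argmax` with sampling from the softmax distribution gives more varied output, which is often preferred for creative text generation.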

During inference, we can employ beam search or other decoding strategies, which keep several candidate sequences alive instead of committing to the single most likely character at each step. Separately, an attention mechanism can be added to the architecture so that the decoder can focus on relevant input regions; because attention is part of the model, it has to be included at training time rather than added afterwards at inference.
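Beam search can be illustrated without a neural network at all. In the sketch below, `step_fn` stands in for the trained model: it takes the sequence so far and returns log-probabilities for the next token. The function names, the `^` and `$` start and end markers, and the toy probability table are all assumptions chosen so that greedy decoding and beam search disagree.

```python
import math

def beam_search(step_fn, sos, eos, beam_width=3, max_len=10):
    """Keep the `beam_width` best partial sequences by summed log-probability."""
    beams = [([sos], 0.0)]
    finished = []
    for _ in range(max_len):
        candidates = []
        for seq, score in beams:
            for tok, logp in step_fn(seq).items():
                candidates.append((seq + [tok], score + logp))
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for seq, score in candidates[:beam_width]:
            # Sequences that just emitted the end marker stop growing.
            (finished if seq[-1] == eos else beams).append((seq, score))
        if not beams:
            break
    finished.extend(beams)   # hypotheses cut off at max_len still count
    return max(finished, key=lambda c: c[1])

def toy_step(prefix):
    """Hypothetical stand-in for a model: next-token log-probs for a prefix."""
    table = {
        ("^",): {"a": 0.6, "b": 0.4},
        ("^", "a"): {"x": 0.34, "y": 0.33, "z": 0.33},
        ("^", "b"): {"x": 0.9, "y": 0.1},
    }
    probs = table.get(tuple(prefix), {"$": 1.0})  # otherwise: end the sequence
    return {tok: math.log(p) for tok, p in probs.items()}

greedy_seq, greedy_score = beam_search(toy_step, "^", "$", beam_width=1)
beam_seq, beam_score = beam_search(toy_step, "^", "$", beam_width=2)
print("".join(greedy_seq), round(math.exp(greedy_score), 3))  # ^ax$ 0.204
print("".join(beam_seq), round(math.exp(beam_score), 3))      # ^bx$ 0.36
```

With a beam width of 1 the search is greedy and commits to `a`, the locally best first character, ending with total probability 0.204. A width of 2 keeps `b` alive and finds the sequence with probability 0.36. Summed log-probabilities favor shorter outputs, so implementations often normalize scores by length.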

Applications and Real-World Examples

Character translation with PyTorch’s LSTM finds applications in a wide range of NLP tasks. Let’s explore some real-world examples and use cases:

Machine Translation

One of the most prominent applications of character translation is machine translation. By training an LSTM model on parallel corpora, we can translate text between languages. The model learns linguistic patterns at the character level, which helps with rare words, names, and morphologically rich languages that a fixed word vocabulary handles poorly.

Text Generation

Character translation models can be employed for text generation tasks, such as generating creative stories, poems, or even music lyrics. Given a seed sequence as input, the model generates a continuation one character at a time. Output quality depends heavily on the training data and model size, but a well-trained model can produce text that is locally coherent and mostly well-formed.

Spelling Correction

Character translation can also be utilized for spelling correction tasks. Given an input sequence with spelling errors, the model generates the corrected version, helping to improve the readability and accuracy of text. This application finds utility in various domains, including email filtering, text editing, and content moderation.

Text-to-Speech Synthesis

In text-to-speech synthesis, character translation models can serve as a front-end component. A character-level seq2seq model can convert written text into a sequence of phonemes (grapheme-to-phoneme conversion), which later stages of the synthesis pipeline turn into audio. Accurate pronunciation prediction contributes to natural-sounding speech in voice assistants, telecommunications, and entertainment.

Challenges and Future Directions

While character translation with PyTorch’s LSTM has shown remarkable potential, there are still challenges to address and opportunities for improvement. Some of the key considerations include:

- Dataset Size and Quality: Training LSTM models requires large and diverse datasets to achieve optimal performance. Collecting and curating high-quality datasets is crucial for ensuring the model's generalization capabilities and avoiding bias.

- Training Efficiency: Training LSTM models can be computationally expensive, especially for large datasets, because each time step depends on the previous one and cannot be fully parallelized. Distributed training and mixed-precision arithmetic can reduce training time, while model pruning and quantization mainly reduce the cost of running the trained model.

- Model Interpretability: Understanding the inner workings of LSTM models and interpreting their predictions can be challenging. Research in model interpretability and explainability can help shed light on the decision-making process and enhance trust in the model's outputs.

- Multimodal Integration: Integrating character translation models with other modalities, such as images or audio, can open up new avenues for exploration. By combining textual and visual information, we can achieve more contextually rich and accurate translations.

Conclusion

Character translation with PyTorch’s LSTM offers a powerful approach to tackling a wide range of NLP tasks. By leveraging the capabilities of LSTM networks and the flexibility of PyTorch, developers can build sophisticated models that capture intricate linguistic patterns and generate coherent outputs. From machine translation to text generation and spelling correction, the applications of character translation are vast and promising.

As we continue to advance in the field of NLP, character translation with LSTM will likely play a significant role in shaping the future of language understanding and generation. With ongoing research and development, we can expect even more impressive results and innovative applications, pushing the boundaries of what is possible in natural language processing.

How does character translation differ from word-level translation?

Character translation operates at a more granular level, considering individual characters as the basic unit of analysis. This keeps the vocabulary small and lets the model handle rare or unseen words, at the cost of longer sequences. In contrast, word-level translation treats words or phrases as tokens and relies on a fixed vocabulary, so words outside that vocabulary need special handling.

What are some common challenges in character translation?

Common challenges in character translation include handling out-of-vocabulary (OOV) characters, dealing with variable-length sequences, and ensuring the model’s ability to capture long-term dependencies. Additionally, ensuring the model’s generalization capabilities and avoiding overfitting can be challenging, especially with limited training data.
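For variable-length sequences specifically, PyTorch provides padding and packing utilities in `torch.nn.utils.rnn`. The sketch below pads three sequences of different lengths into one batch and packs them so the LSTM skips the padded positions. The padding id `0`, the example ids, and the layer sizes are assumptions for illustration.

```python
import torch
import torch.nn as nn
from torch.nn.utils.rnn import (pad_sequence, pack_padded_sequence,
                                pad_packed_sequence)

PAD_ID = 0  # assumed id reserved for padding

# Three encoded sequences with lengths 4, 2, and 3.
seqs = [torch.tensor([5, 3, 8, 2]), torch.tensor([7, 4]), torch.tensor([6, 9, 1])]
lengths = torch.tensor([len(s) for s in seqs])

# Pad to a rectangular (batch, max_len) tensor.
padded = pad_sequence(seqs, batch_first=True, padding_value=PAD_ID)

embed = nn.Embedding(10, 8, padding_idx=PAD_ID)
lstm = nn.LSTM(8, 16, batch_first=True)

# Packing tells the LSTM each sequence's true length;
# enforce_sorted=False removes the need to sort the batch by length.
packed = pack_padded_sequence(embed(padded), lengths,
                              batch_first=True, enforce_sorted=False)
packed_out, (h, c) = lstm(packed)

# Unpack back to a padded tensor; outputs at padded positions are zeros.
out, out_lengths = pad_packed_sequence(packed_out, batch_first=True)
print(padded.shape, out.shape, out_lengths.tolist())
```

With packing, the final hidden state `h` for each sequence corresponds to its last real character rather than to a padding position.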

How can we improve the performance of character translation models?

To improve the performance of character translation models, we can explore techniques such as data augmentation, regularization, and model architecture optimization. Additionally, utilizing larger and more diverse datasets, employing pre-trained language models, and incorporating attention mechanisms can further enhance the model’s capabilities.